TensorFlow is a powerful open-source software library for numerical computation, particularly well suited to large-scale machine learning. In this tutorial, we will walk step by step through using TensorFlow to train a simple neural network and then using the trained model to make predictions.

Basic model training

Environment setup

First, make sure you have Python and TensorFlow installed. TensorFlow can be installed by running the following command:

```shell
pip install tensorflow
```

Data preparation

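We use the MNIST handwritten-digit dataset that ships with Keras in `tf.keras.datasets`. It contains 60,000 training images and 10,000 test images; each is a 28×28 grayscale image of a digit from 0 to 9, with pixel values from 0 to 255. Dividing by 255.0 scales the pixels to the range [0, 1].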

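```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist

# Downloads MNIST on first use, then loads it from the local cache
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Scale pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0
```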
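To see what the normalization step does to the data type and value range, here is a small NumPy sketch. It uses a hypothetical stand-in array instead of the real dataset, shaped and typed like the `uint8` images that `load_data()` returns.

```python
import numpy as np

# Hypothetical stand-in for MNIST: 2 grayscale 28x28 images with uint8 pixels
# covering every value from 0 to 255.
images = (np.arange(2 * 28 * 28) % 256).astype(np.uint8).reshape(2, 28, 28)

# Dividing by 255.0 promotes uint8 to float64 and maps [0, 255] onto [0.0, 1.0].
scaled = images / 255.0

# Keras layers default to float32, so an explicit cast halves the memory footprint.
scaled32 = (images / 255.0).astype(np.float32)

print(images.dtype, scaled.dtype, scaled32.dtype)  # uint8 float64 float32
print(scaled.min(), scaled.max())                  # 0.0 1.0
```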

Build the model

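Next, build a simple `Sequential` model. The `Flatten` layer unrolls each 28×28 image into a 784-element vector, which feeds a 128-unit densely connected layer with ReLU activation. A `Dropout` layer then randomly zeroes 20% of that layer's outputs during training to reduce overfitting, and a final 10-unit dense layer with softmax activation returns a probability distribution over the 10 digit classes.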

```python
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(10, activation='softmax')
])
```
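As a rough check on this architecture, the number of trainable parameters can be worked out by hand. The sketch below is plain Python with a hypothetical helper `dense_params`, using the standard dense-layer count of weights plus biases; its total should match what `model.summary()` reports for this model.

```python
# Hypothetical helper: trainable parameters of a Dense layer = weights + biases.
def dense_params(inputs: int, units: int) -> int:
    return inputs * units + units

flat = 28 * 28                      # Flatten: 28x28 image -> 784 values (no parameters)
hidden = dense_params(flat, 128)    # Dense(128): 784*128 + 128
# Dropout has no parameters; it only zeroes activations during training.
output = dense_params(128, 10)      # Dense(10): 128*10 + 10
total = hidden + output

print(flat, hidden, output, total)  # 784 100480 1290 101770
```

Almost all of the parameters sit in the first dense layer, because every one of the 784 input pixels connects to every one of the 128 hidden units.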

Compile the model

Before training, a few settings must be specified in the model's compile step. Here we use the Adam optimizer (`'adam'`) and the `sparse_categorical_crossentropy` loss function, which takes integer class labels like those in `y_train` rather than one-hot vectors. Accuracy is used as the evaluation metric.

```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```
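What the loss computes can be illustrated with NumPy. This sketch uses made-up probabilities and labels (not real model output) and ignores the numerical-stability safeguards Keras applies internally; it shows that sparse categorical cross-entropy on integer labels is the same quantity as ordinary categorical cross-entropy on one-hot labels.

```python
import numpy as np

# Hypothetical softmax outputs for 3 images over 10 classes (each row sums to 1).
probs = np.full((3, 10), 0.02)
probs[0, 7] = 0.82   # confident and correct (label 7)
probs[1, 2] = 0.82   # confident and correct (label 2)
probs[2, 1] = 0.82   # confident but wrong (label 0)
labels = np.array([7, 2, 0])  # integer labels, as in y_train

# Sparse categorical cross-entropy: mean of -log(probability of the true class).
sparse_loss = -np.mean(np.log(probs[np.arange(len(labels)), labels]))

# Categorical cross-entropy on the equivalent one-hot labels.
one_hot = np.eye(10)[labels]
dense_loss = -np.mean(np.sum(one_hot * np.log(probs), axis=1))

print(f"{sparse_loss:.4f} {dense_loss:.4f}")
```

The wrong, confident prediction on the third image dominates the average, which is the behavior that pushes training to lower the probability of incorrect classes.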

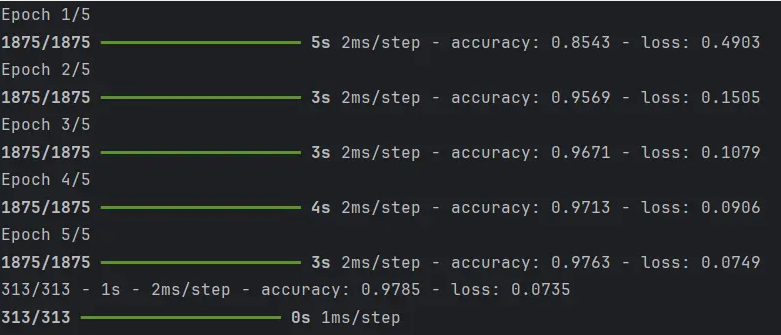

Train the model

We can now train the model with its `fit` method, passing in the training data and the number of epochs (complete passes over the training set).

```python
model.fit(x_train, y_train, epochs=5)
```
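Because no `batch_size` is passed, `fit` falls back to its default batch size of 32, so each epoch is split into many weight updates. The arithmetic, as a plain-Python sketch:

```python
import math

num_train = 60_000   # MNIST training samples
batch_size = 32      # Keras default when fit() is called without batch_size
epochs = 5

steps_per_epoch = math.ceil(num_train / batch_size)
total_updates = steps_per_epoch * epochs

print(steps_per_epoch, total_updates)  # 1875 9375
```

This is why the progress bar during training counts up to 1875 steps per epoch; passing a larger `batch_size` to `fit` means fewer, larger steps.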

Evaluate the model

After training, evaluate the model on the test set, which it did not see during training. `evaluate` returns the test loss followed by the accuracy.

```python
model.evaluate(x_test, y_test, verbose=2)
```
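With `metrics=['accuracy']` and integer labels, the reported accuracy is the fraction of images whose most probable class matches the label. A small NumPy sketch with hypothetical probabilities (3 classes instead of 10, for brevity) shows the calculation:

```python
import numpy as np

# Hypothetical predicted probabilities for 4 test images over 3 classes.
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
labels = np.array([0, 1, 2, 1])

# Accuracy: share of samples whose highest-probability class equals the label.
predicted = np.argmax(probs, axis=1)
accuracy = np.mean(predicted == labels)

print(predicted, accuracy)  # [0 1 2 0] 0.75
```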

Use the model

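Finally, use the trained model to make predictions. `predict` returns one row of 10 class probabilities per image, and `np.argmax` picks the index of the largest probability, which is the predicted digit.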

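```python
import numpy as np

# Class probabilities for every test image, shape (10000, 10)
predictions = model.predict(x_test)

# Predicted digit for the first test image
print(np.argmax(predictions[0]))
```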
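The softmax activation on the last layer is what turns raw scores into the probabilities that `predict` returns. The minimal NumPy sketch below uses hypothetical logits and a hand-written `softmax` helper (an illustration, not the Keras implementation) to show that the probabilities sum to 1 and that `argmax` picks the highest-scoring class:

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    # Subtracting the max keeps exp() from overflowing; it does not change the result.
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / np.sum(exps)

# Hypothetical raw outputs (logits) of the last Dense layer for one image.
logits = np.array([1.0, 0.5, 3.0, 0.2, 0.1, 0.0, -1.0, 2.0, 0.3, 0.4])
probs = softmax(logits)

print(np.isclose(probs.sum(), 1.0))  # True
print(int(np.argmax(probs)))         # 2
```

Softmax preserves the ordering of the scores, so taking `argmax` of the probabilities gives the same digit as taking it of the logits.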

Complete code

```python
import tensorflow as tf
import numpy as np

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # Scale pixel values from [0, 255] to [0, 1]

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),   # 28x28 image -> 784-element vector
    tf.keras.layers.Dense(128, activation='relu'),   # Fully connected hidden layer
    tf.keras.layers.Dropout(0.2),                    # Drop 20% of activations during training
    tf.keras.layers.Dense(10, activation='softmax')  # Probabilities for digits 0-9
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test, verbose=2)

predictions = model.predict(x_test)
print(np.argmax(predictions[0]))  # Predicted digit for the first test image
```

Advanced model training: Build and train a convolutional neural network (CNN) using TensorFlow

This advanced example uses TensorFlow to build and train a convolutional neural network (CNN), which typically reaches higher accuracy on handwritten digit recognition than the densely connected network above. CNNs are a standard deep learning tool for image recognition and classification.

Data preparation

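We again use the MNIST dataset. As in the previous example, it is loaded and normalized, but this time the images must also be reshaped for the CNN: `Conv2D` layers expect input of shape (height, width, channels), so each 28×28 grayscale image gets a single channel axis and becomes 28×28×1.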

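```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Scale pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0

# Add a channel axis: (60000, 28, 28) -> (60000, 28, 28, 1)
x_train = x_train[..., tf.newaxis]
x_test = x_test[..., tf.newaxis]
```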
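The `[..., tf.newaxis]` indexing is ordinary NumPy-style indexing: `...` keeps every existing axis and `tf.newaxis` (which, like `np.newaxis`, is just `None`) appends a new length-1 axis. A NumPy-only sketch on a hypothetical small batch:

```python
import numpy as np

# Hypothetical batch of 3 grayscale images shaped like MNIST: (samples, height, width).
images = np.zeros((3, 28, 28), dtype=np.float32)

# `...` keeps all existing axes; np.newaxis appends a length-1 channel axis,
# giving (samples, height, width, channels).
with_channel = images[..., np.newaxis]
print(images.shape, with_channel.shape)  # (3, 28, 28) (3, 28, 28, 1)

# np.expand_dims produces the same shape more explicitly.
print(np.expand_dims(images, -1).shape == with_channel.shape)  # True
```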

Build the CNN model

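Build a simple CNN: three convolutional layers (32, 64, and 64 filters of size 3×3), with a 2×2 max-pooling layer after each of the first two, followed by a flattening step, a 64-unit dense layer, and a 10-unit softmax output layer.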

```python
model = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])
```
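The feature-map sizes and parameter counts of this CNN can be traced by hand. The plain-Python sketch below relies on the layer defaults used above: `Conv2D` uses 'valid' padding with stride 1, and `MaxPooling2D` uses a stride equal to its pool size. The helpers `conv_out`, `pool_out`, and `conv_params` are hypothetical names for illustration; the resulting numbers should agree with what `model.summary()` shows.

```python
# Hypothetical helpers for 'valid' 3x3 convolutions (stride 1) and 2x2 max pooling.
def conv_out(size: int, kernel: int = 3) -> int:
    return size - kernel + 1

def pool_out(size: int, pool: int = 2) -> int:
    return size // pool  # 'valid' pooling drops a leftover odd row/column

def conv_params(kernel: int, in_ch: int, filters: int) -> int:
    return kernel * kernel * in_ch * filters + filters  # weights + one bias per filter

s = 28
s = conv_out(s)    # Conv2D(32): 26x26
s = pool_out(s)    # MaxPooling2D: 13x13
s = conv_out(s)    # Conv2D(64): 11x11
s = pool_out(s)    # MaxPooling2D: 5x5
s = conv_out(s)    # Conv2D(64): 3x3
flat = s * s * 64  # Flatten: 3*3*64 values

params = [
    conv_params(3, 1, 32),   # first Conv2D
    conv_params(3, 32, 64),  # second Conv2D
    conv_params(3, 64, 64),  # third Conv2D
    flat * 64 + 64,          # Dense(64)
    64 * 10 + 10,            # Dense(10)
]
print(s, flat, sum(params))  # 3 576 93322
```

Despite having more layers, this CNN has fewer parameters than the earlier dense network, because each convolutional filter is a small 3×3 kernel shared across the whole image.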

Compile the model

As in the previous example, compile the model by specifying the optimizer, loss function, and evaluation metric.

```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

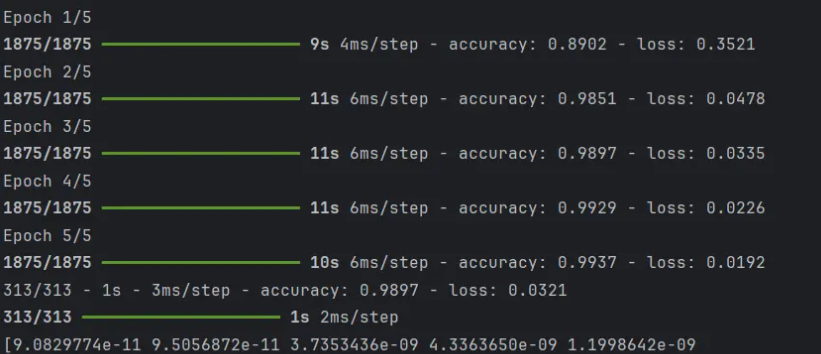

Train the model

Train the CNN with the `fit` method.

```python
model.fit(x_train, y_train, epochs=5)
```

Evaluate the model

Evaluate the performance of the model using the test dataset.

```python
model.evaluate(x_test, y_test, verbose=2)
```

Use the model

After training, use the model to make predictions. Here it predicts on the test images, and `predictions[0]` is the vector of 10 class probabilities for the first one.

```python
predictions = model.predict(x_test)
print(predictions[0])
```


Complete code

```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # Scale pixel values to [0, 1]

# Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train[..., tf.newaxis]
x_test = x_test[..., tf.newaxis]

model = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test, verbose=2)

predictions = model.predict(x_test)
print(predictions[0])  # Class probabilities for the first test image
```

Conclusion

Building and training a convolutional neural network typically improves handwritten digit recognition accuracy over the simple densely connected network. A CNN is better suited to image data because its convolutional filters learn local patterns such as edges and textures, and each filter reuses the same weights at every position in the image. This example shows how to quickly build and train a relatively complex CNN with TensorFlow, and it is a good starting point for deeper study of deep learning and TensorFlow. As you learn more about deep learning, you will be able to build more complex and powerful models to solve a wider range of problems.
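To make the idea of "learning local patterns" concrete, the NumPy sketch below slides one 3×3 filter over a tiny image. The image and the vertical-edge filter are hypothetical and hand-picked; a trained CNN learns its filter values from data. Like Keras `Conv2D`, the helper computes a cross-correlation (the kernel is not flipped) with 'valid' padding and stride 1, but only for a single channel and a single filter.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    # Single-channel, single-filter cross-correlation, 'valid' padding, stride 1.
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the 3x3 patch under the filter.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 6x6 image: dark left half, bright right half (one vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked vertical-edge filter: negative on the left column, positive on the right.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

response = conv2d_valid(image, kernel)
print(response)
```

Every row of the 4×4 response is `[0, 3, 3, 0]`: the filter responds only where its window straddles the edge and stays at zero over flat regions, and because the same weights are applied at every position, it would detect the edge wherever it appeared in the image.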