Artificial Intelligence is almost as old as computer science. It was first proposed in the 1950s and became a relatively mature theory in the 1960s. However, due to the constraints of computing power and data, major breakthroughs (deep learning and CNN) did not occur until the first decade of the 21st century, and officially broke out (large models) in the second decade. In the fall of 2022, OpenAI’s ChatGPT 3 was released, allowing AI to reach the “human-like” level for the first time, and the Large Language Model (LLM) also officially entered the public eye. Since then, big models have begun to dominate the screen and dominate the charts, and people are talking about big models. If you don’t mess around with large models, you seem to be a primitive person, and you can’t even get a word in when chatting with others. After learning from the pain, let’s study the large model today to keep up with the pace.

Note: The often referred to large model is a loose abbreviation of large language model. A better term is large language model (Large Langauge Model) or its English abbreviation LLM. This article may use them mixedly.

The best way to understand something is to first learn to use it, so we start by using large models.

Deploy LLM locally

There are many ways to experience large models. The most convenient is to directly use the chatbots provided by major AI manufacturers such as ChatGPT or ChatGLM. It is indeed interesting. We can find that the difference between LLM and previous artificial intelligence is that it can understand human speech and speak like human speech, which means that it has truly reached the “human-like” level.

As an orangutan developer, just playing like this is too boring. The most suitable way to develop an orangutan is to toss it by yourself. Yes, we need to deploy LLM locally, so that it will be more enjoyable to play. To save money, local deployment has the following benefits:

You can have an in-depth understanding of LLM’s technology stack and try it yourself to know what is there and what is needed.

More secure and able to protect personal privacy. Needless to say, it is convenient to use the Chat service or API directly, but it only transfers the data to other people’s servers. If there are some words that are inconvenient to say or not suitable for others to read, they will definitely not be used. But if you use LLM deployed locally, you don’t have to worry.

Customize LLM to create a personal knowledge base or knowledge assistant.

Perform model fine-tuning and deep learning.

Accumulate experience. If you move to the cloud in the future, you can quickly deploy it once you have experience.

There are not many benefits to deploying LLM locally. The only disadvantage is that LLM is very hardware-intensive and expensive to run. It costs a lot of money to run smoothly.

Open Source LLM Hosting Platform

Obviously, in order to deploy LLM locally, the model itself must be open source, so only open source LLM can be deployed locally, and closed source models can only be used through its API.

So, what you must understand before you bother is where to find open source LLM. Fortunately, there are not only many open source LLMs, but also very convenient LLM hosting platforms. The LLM hosting platform is like GitHub for open source code. After major companies complete research and development and testing, they will release LLM to the hosting platform for people to use.

The most famous one is HuggingFace. It not only provides LLM hosting, but also has an LLM evaluation system that has almost become an industry standard. It regularly releases evaluations of the latest models to help everyone choose the right LLM. It also provides a Python library for downloading and using LLM, the famous transformers. But alas, we don’t have access (you know).

Don’t panic, for technical websites that are inaccessible, there must be domestic mirrors, which are very easy to use and can be accessed very quickly.

Tsinghua University also had a mirror, but it was no longer available.

How to run LLM locally

Here are some very convenient ways to deploy and run LLM locally that can be learned in five minutes.

Ollama

The most convenient way is to use Ollama, which is very convenient to use. After installation, you can run and use LLM with just one sentence: ollama run llama3

This will run Meta’s latest LLaMA3 model. Of course, before running a specific model, it is best to read its documentation to confirm whether the hardware configuration meets the model requirements. For example, the author whose family is relatively poor uses the beggar’s version of MBP (16G RAM + 4G GPU) and can only choose models within 8B. Those with better family conditions can get the 13B model, but it is best not to try the 33B model.

Ollama is very easy to use. It is C/S type, which means that it will start a small HTTP server to run LLM. In addition to directly using Ollama’s own terminal, it can also serve as a model API for other tools to use, such as For example, LangChain can seamlessly connect with Ollama.

Its disadvantage is that it originates from Mac and is most friendly to Mac. Other systems such as Windows and Linux are only supported later. In addition, it is relatively simple to use, with only a command line terminal, so a better way is to use Ollama to manage and run LLM, but then use other tools to build a more usable terminal.

LM Studio

LM Studio is an integrated, user-friendly, open source LLM application with a beautiful interface. It integrates LLM downloading, running and using, and has a very easy-to-use graphical terminal. The disadvantage is that it has high hardware requirements, and it cannot be used as an API and cannot be connected with other tools.

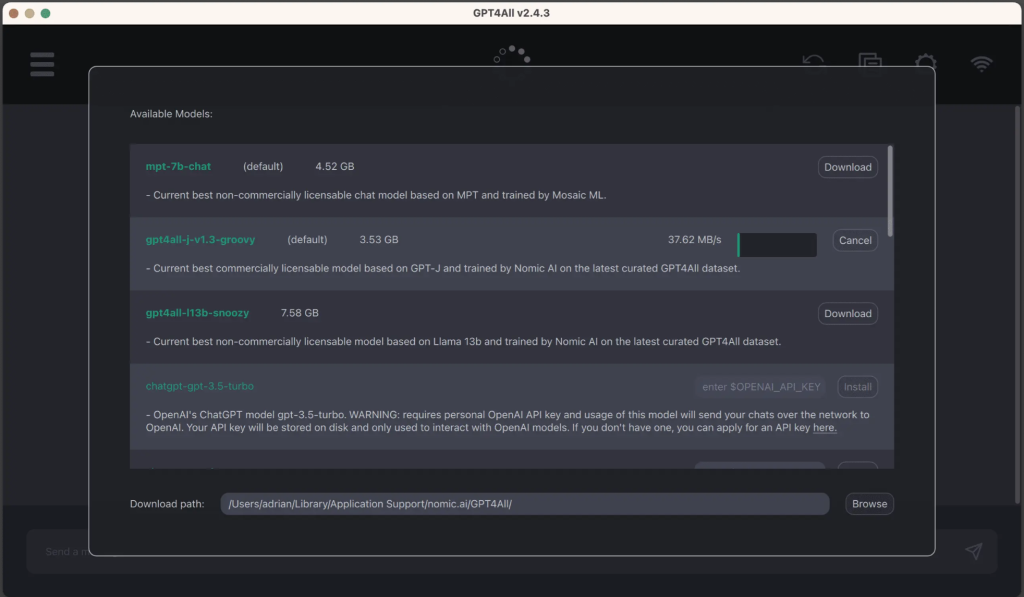

GPT4All

GPT4All is an integrated, user-friendly tool similar to LM Studio. In addition to convenient downloading, it also provides a convenient graphical terminal to use LLM. In addition to using the downloaded LLM, it also supports API, and in addition to normal Chat, it can also directly process documents, that is, it uses documents as input to LLM. Its hardware requirements are not as high as LM Studio. The disadvantage is that it does not seem to be Mac-friendly because it requires the latest version of MacOS.

Summarize

This article introduces several very convenient ways to run LLM locally. According to the characteristics of the tool, if you are using a Mac or want to use it in combination with other tools, it is recommended to use Ollama. After all, it is for Mac The most friendly; if the hardware is better, use LM Studio, otherwise use GTP4ALL.