If you want to build your own Agent, you cannot avoid dealing with LangChain.

So this article introduces LangChain: what it is, how to use it, what you can build with it, and what its problems are.

There is already a lot of written and video material on LangChain, so I won’t repeat it. I will add relevant materials at the end of the article for interested readers to study on their own.

I suspect many people read my articles because they don’t yet understand LangChain or Agents. Most people’s time is precious, and they cannot spend several days studying the topic systematically. They are also not sure whether learning LangChain will actually help them in their work.

So I try to use the simplest language to explain the situation.

We want to build our own AI system (Agent)

Suppose we want to build our own question-answering system that draws on our private database.

What should we do? We can first consider the lowest-cost proof-of-concept (POC) solution:

- Use the OpenAI API for ChatGPT, the most powerful large language model

- Write Python code on top of the openai package to do knowledge-base Q&A: slice multiple files, vectorize them, and build the RAG pipeline yourself
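To make the do-it-yourself route concrete, here is a minimal sketch of the slicing, vectorization, and prompt-assembly steps using only the standard library. The bag-of-words "embedding" is a toy assumption standing in for a real embedding model, and the function names and the sample document are hypothetical; the final prompt would then be sent to the model.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 80) -> list[str]:
    """Slice text into chunks of roughly chunk_size characters, on sentence boundaries."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        if current and len(current) + len(sentence) + 1 > chunk_size:
            chunks.append(current)
            current = sentence
        else:
            current = f"{current} {sentence}".strip()
    if current:
        chunks.append(current)
    return chunks


def embed(text: str) -> Counter:
    """Toy vectorization: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the prompt from the retrieved context and the question."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Hypothetical private knowledge base.
document = (
    "LangChain is a framework for building LLM applications. "
    "Our refund policy allows returns within 30 days. "
    "Support is available on weekdays from 9 to 5."
)
question = "What is the refund policy?"
chunks = split_text(document, chunk_size=60)
prompt = build_prompt(question, retrieve(question, chunks))
print(prompt)
```

Even this toy version needs four helpers before any model is called, which is the kind of plumbing a framework takes off your hands.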

The idea is sound, but writing it all yourself is still fairly hard unless you are an expert. And as the project grows more complex, its architecture becomes critical.

At this point, some experts may say: you can use GPTs or another agent platform to build a question-answering system over your own knowledge base without writing any code.

That’s right, but I am only using the familiar question-answering system as an example. With LangChain you can build systems far more complex than this. For example, you can build your own Agent platform on top of LangChain.

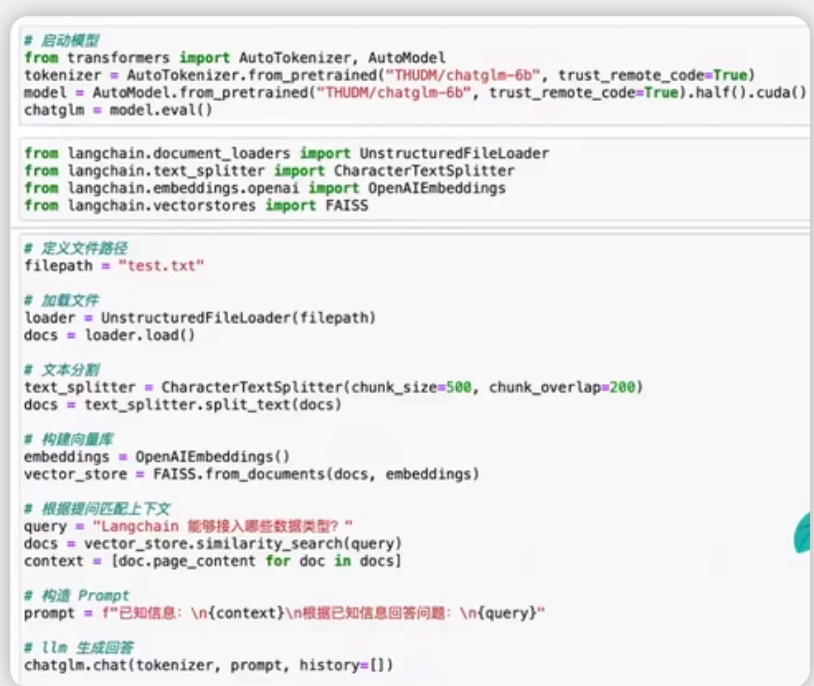

Here is an example that uses ChatGLM-6b, a large language model that can be deployed locally, and a local knowledge base, test.txt. A dozen or so simple lines of code are enough to build the question-answering system we want: load the file, split its content, vectorize it, and then assemble the Prompt from the vectorized content and the Query. It’s that simple.

Of course, introducing LangChain also has side effects. Anyone who has worked with low-code development knows that graphical tools reduce the complexity of building a system but also reduce its performance. Likewise, a task that takes only 3 minutes with the most streamlined code may take several times longer to run through LangChain.

LangChain core functions

Okay, now that we have confidence, let’s learn what capabilities LangChain has.

PromptTemplate

    from langchain.prompts import PromptTemplate

    prompt = PromptTemplate(
        input_variables=["product"],
        template="What is a good name for a company that makes {product}?",
    )
    print(prompt.format(product="colorful socks"))

Output: What is a good name for a company that makes colorful socks?

You can add variables to Prompt through PromptTemplate. It’s very simple.
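To show what is going on underneath, here is a minimal sketch of the same idea with Python's built-in string formatting. SimplePromptTemplate is a hypothetical stand-in written for this article, not LangChain's implementation; it only assumes that a template is a string with named placeholders plus a declared list of variables.

```python
import string


class SimplePromptTemplate:
    """Hypothetical stand-in for PromptTemplate: a template string plus declared variables."""

    def __init__(self, input_variables, template):
        # Collect the {placeholders} that actually appear in the template.
        found = {name for _, name, _, _ in string.Formatter().parse(template) if name}
        if found != set(input_variables):
            raise ValueError(f"template uses {sorted(found)}, declared {sorted(input_variables)}")
        self.input_variables = list(input_variables)
        self.template = template

    def format(self, **kwargs):
        # Refuse to build a prompt with unfilled variables.
        missing = set(self.input_variables) - set(kwargs)
        if missing:
            raise KeyError(f"missing variables: {sorted(missing)}")
        return self.template.format(**kwargs)


prompt = SimplePromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)
print(prompt.format(product="colorful socks"))
```

Checking the declared variables against the template up front means a typo in a placeholder fails when the template is created rather than when the prompt is sent.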

Chain

    from langchain.prompts import PromptTemplate
    from langchain.llms import OpenAI

    llm = OpenAI(temperature=0.9)
    prompt = PromptTemplate(
        input_variables=["product"],
        template="What is a good name for a company that makes {product}?",
    )

    from langchain.chains import LLMChain
    chain = LLMChain(llm=llm, prompt=prompt)

    chain.run("colorful socks")

Output: '\n\nSocktastic!'

That is all it takes to use a LangChain Chain, which is still very simple.
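Conceptually, a chain of this kind is just "format the prompt, then call the model". The sketch below illustrates that composition under stated assumptions: SimpleChain and fake_llm are hypothetical names, and fake_llm merely echoes its prompt so the example runs without an API key.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; it just echoes what it was asked."""
    return f"[model reply to: {prompt}]"


class SimpleChain:
    """Sketch of the chain idea: fill in the prompt, then pass it to the model."""

    def __init__(self, llm: Callable[[str], str], template: str, input_variable: str):
        self.llm = llm
        self.template = template
        self.input_variable = input_variable

    def run(self, value: str) -> str:
        # Step 1: fill the single variable into the template.
        prompt = self.template.format(**{self.input_variable: value})
        # Step 2: hand the finished prompt to the model and return its reply.
        return self.llm(prompt)


chain = SimpleChain(
    llm=fake_llm,
    template="What is a good name for a company that makes {product}?",
    input_variable="product",
)
print(chain.run("colorful socks"))
```

Because the model is passed in as a plain callable, swapping fake_llm for a real client would not change the chain itself.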

Agent

In order to use agents well, you need to understand the following concepts:

- Tools: functions that perform a specific task, such as a Google search, a database lookup, a Python REPL, or another chain. A tool’s interface is currently a function that takes a string as input and returns a string as output.

    from langchain.agents import load_tools
    from langchain.agents import initialize_agent
    from langchain.agents import AgentType
    from langchain.llms import OpenAI

    # Load the language model that will drive the agent.
    llm = OpenAI(temperature=0)

    # Load the tools. llm-math uses an LLM itself, so we pass one in.
    tools = load_tools(["serpapi", "llm-math"], llm=llm)

    # Initialize an agent with the tools, the language model, and the agent type.
    agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

    # Ask a question that needs both the search tool and the calculator.
    agent.run("What was the high temperature in SF yesterday in Fahrenheit? What is that number raised to the .023 power?")

Output:

    > Entering new AgentExecutor chain...
    I need to find the temperature first, then use the calculator to raise it to the .023 power.
    Action: Search
    Action Input: "High temperature in SF yesterday"
    Observation: San Francisco Temperature Yesterday. Maximum temperature yesterday: 57 °F (at 1:56 pm) Minimum temperature yesterday: 49 °F (at 1:56 am) Average temperature …
    Thought: I now have the temperature, so I can use the calculator to raise it to the .023 power.
    Action: Calculator
    Action Input: 57^.023
    Observation: Answer: 1.0974509573251117
    Thought: I now know the final answer
    Final Answer: The high temperature in SF yesterday in Fahrenheit raised to the .023 power is 1.0974509573251117.
    > Finished chain.

With an Agent added, doesn’t it feel a bit intelligent? It knows how to search, observe, use tools, and finally reach a conclusion on its own.
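The Thought / Action / Observation trace above can be read as a simple loop: ask the model for the next step, run the named tool, append the observation, and repeat until a final answer appears. Here is a minimal sketch of that loop. Everything in it is an assumption made for illustration: scripted_llm is a hypothetical stand-in that follows a fixed script instead of calling a model, the search tool returns a canned result, and the parsing is far cruder than what a real agent framework does.

```python
import re
from typing import Callable, Dict


# Tools follow the interface described earlier: a string in, a string out.
def search(query: str) -> str:
    # Hypothetical canned result; a real tool would call a search API.
    return "Maximum temperature yesterday: 57 °F"


def calculator(expression: str) -> str:
    # Only handles "base^exponent", which is enough for this example.
    base, exponent = expression.split("^")
    return str(float(base) ** float(exponent))


TOOLS: Dict[str, Callable[[str], str]] = {"Search": search, "Calculator": calculator}


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for the model: picks the next step from the transcript so far."""
    if "Observation:" not in transcript:
        return "Thought: I need the temperature first.\nAction: Search\nAction Input: High temperature in SF yesterday"
    if transcript.count("Observation:") == 1:
        temperature = re.search(r"(\d+) °F", transcript).group(1)
        return f"Thought: Now raise it to the power.\nAction: Calculator\nAction Input: {temperature}^.023"
    answer = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I now know the final answer\nFinal Answer: {answer}"


def run_agent(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        final = re.search(r"Final Answer: (.*)", step)
        if final:
            return final.group(1)
        # Parse which tool to call and with what input, then record the result.
        action = re.search(r"Action: (.*)", step).group(1).strip()
        action_input = re.search(r"Action Input: (.*)", step).group(1).strip()
        transcript += f"Observation: {TOOLS[action](action_input)}\n"
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What was the high temperature in SF yesterday, raised to the .023 power?", scripted_llm)
print(answer)
```

The max_steps cap is a design choice worth keeping in any real version, since a model that never emits a final answer would otherwise loop forever.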

Memory

All the chains and agents we have seen so far have been stateless.

But generally, you might want the chain or agent to have some notion of “memory” so that it can remember information about its previous interactions.

The simplest and clearest example of this is when designing a chatbot – you want it to remember previous messages so that it can leverage the context of those messages to have a better conversation.

ConversationChain provides this kind of “short-term memory”.

Input:

    from langchain import OpenAI, ConversationChain

    llm = OpenAI(temperature=0)
    # ConversationChain keeps the previous turns and adds them to each new prompt.
    conversation = ConversationChain(llm=llm, verbose=True)
    output = conversation.predict(input="Hi there!")
    print(output)

Output:

    > Entering new chain...
    Prompt after formatting:
    The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.
    Current conversation:
    Human: Hi there!
    AI:
    > Finished chain.
    ' Hello! How are you today?'

Input:

    output = conversation.predict(input="I'm doing well! Just having a conversation with an AI.")
    print(output)

Output:

    > Entering new chain...
    Prompt after formatting:
    The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.
    Current conversation:
    Human: Hi there!
    AI: Hello! How are you today?
    Human: I'm doing well! Just having a conversation with an AI.
    AI:
    > Finished chain.
    " That's great! What would you like to talk about?"
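Comparing the two prompts shows how this memory works: the earlier turns are simply replayed in the next prompt. The sketch below illustrates that buffer idea under stated assumptions. BufferMemoryConversation and fake_llm are hypothetical names, the prefix is shortened, and fake_llm just counts the human turns it can see instead of calling a model.

```python
from typing import Callable, List


class BufferMemoryConversation:
    """Sketch of short-term memory: every turn is buffered and replayed in the next prompt."""

    PREFIX = "The following is a friendly conversation between a human and an AI.\nCurrent conversation:\n"

    def __init__(self, llm: Callable[[str], str]):
        self.llm = llm
        self.history: List[str] = []
        self.last_prompt = ""

    def predict(self, input: str) -> str:
        # The parameter is named "input" to mirror the predict(input=...) call in the text.
        self.history.append(f"Human: {input}")
        self.last_prompt = self.PREFIX + "\n".join(self.history) + "\nAI:"
        reply = self.llm(self.last_prompt)
        # Store the reply so the next prompt contains it too.
        self.history.append(f"AI: {reply}")
        return reply


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model: reports how many human turns it can see."""
    return f"I can see {prompt.count('Human:')} human message(s)."


conversation = BufferMemoryConversation(fake_llm)
print(conversation.predict(input="Hi there!"))
print(conversation.predict(input="I'm doing well! Just having a conversation with an AI."))
```

A plain buffer like this grows with every turn, so a long conversation will eventually exceed the model's context window; that is why it only counts as short-term memory.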

Chat model

LangChain also provides a dedicated wrapper for chat models.

The message types currently supported in LangChain are AIMessage, HumanMessage, SystemMessage, and ChatMessage; ChatMessage accepts an arbitrary role parameter. Most of the time, you only need to deal with HumanMessage, AIMessage, and SystemMessage.

    from langchain.chat_models import ChatOpenAI
    from langchain.schema import (
        AIMessage,
        HumanMessage,
        SystemMessage
    )

    chat = ChatOpenAI(temperature=0)
    chat([HumanMessage(content="Translate this sentence from English to French. I love programming.")])
    # -> AIMessage(content="J'aime programmer.", additional_kwargs={})

You can also pass multiple messages for OpenAI’s gpt-3.5-turbo and gpt-4 models.

    messages = [
        SystemMessage(content="You are a helpful assistant that translates English to French."),
        HumanMessage(content="Translate this sentence from English to French. I love programming."),
    ]
    chat(messages)
    # -> AIMessage(content="J'aime programmer.", additional_kwargs={})
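One way to think about these message types is as thin wrappers around the role/content pairs that OpenAI's chat API expects ("system", "user", "assistant"). The sketch below illustrates that mapping only. The Sketch* classes and to_dict are hypothetical names invented here, and how LangChain performs the conversion internally is an assumption, not something taken from its source.

```python
from dataclasses import dataclass
from typing import ClassVar, Dict


@dataclass
class SketchMessage:
    """Hypothetical base class: a message is its content plus a fixed role."""

    content: str
    role: ClassVar[str] = "unknown"

    def to_dict(self) -> Dict[str, str]:
        return {"role": self.role, "content": self.content}


@dataclass
class SketchSystemMessage(SketchMessage):
    role: ClassVar[str] = "system"


@dataclass
class SketchHumanMessage(SketchMessage):
    role: ClassVar[str] = "user"


@dataclass
class SketchAIMessage(SketchMessage):
    role: ClassVar[str] = "assistant"


@dataclass
class SketchChatMessage:
    """Like ChatMessage in the text: the caller supplies an arbitrary role."""

    content: str
    role: str

    def to_dict(self) -> Dict[str, str]:
        return {"role": self.role, "content": self.content}


messages = [
    SketchSystemMessage("You are a helpful assistant that translates English to French."),
    SketchHumanMessage("Translate this sentence from English to French. I love programming."),
]
payload = [m.to_dict() for m in messages]
print(payload)
```

Fixing the role on the class keeps call sites short, while the ChatMessage-style variant covers the rare case where a custom role is needed.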

You can go a step further and use generate to produce completions for multiple sets of messages.

This returns an LLMResult with an additional message parameter.

    batch_messages = [
        [
            SystemMessage(content="You are a helpful assistant that translates English to French."),
            HumanMessage(content="Translate this sentence from English to French. I love programming.")
        ],
        [
            SystemMessage(content="You are a helpful assistant that translates English to French."),
            HumanMessage(content="Translate this sentence from English to French. I love artificial intelligence.")
        ],
    ]
    result = chat.generate(batch_messages)

    result
    # -> LLMResult(generations=[[ChatGeneration(text="J'aime programmer.", generation_info=None, message=AIMessage(content="J'aime programmer.", additional_kwargs={}))], [ChatGeneration(text="J'aime l'intelligence artificielle.", generation_info=None, message=AIMessage(content="J'aime l'intelligence artificielle.", additional_kwargs={}))]], llm_output={'token_usage': {'prompt_tokens': 71, 'completion_tokens': 18, 'total_tokens': 89}})