Core Technology

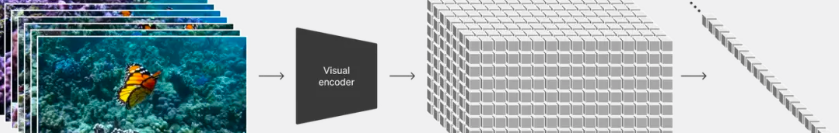

Video Compression Network

OpenAI trained a network that reduces the dimensionality of video data so that it takes up less space. The network takes raw video as input and outputs a more compact representation that is compressed in both time and space. Sora is trained on this compressed representation and generates new video content within it.

To recover video from these compressed representations, OpenAI also trained a corresponding decoder model that maps the latent representations back to pixel space. The approach is inspired by large language models, which handle many kinds of text, such as code and mathematical formulas, by converting them into unified text “tokens”. Similarly, Sora uses visual “patches”, which are small chunks of information extracted from videos.

Previous research such as OpenAI’s CLIP has shown that dividing visual data into small pieces is an effective processing method. OpenAI extends this idea in its video compression network, which turns high-dimensional video data into patches in two steps: it first compresses the video into a low-dimensional “latent space”, and then divides the latent representation into spatiotemporal “patches”. In short, this lets OpenAI process and store video information more efficiently.
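The patch-splitting step can be sketched in a few lines of NumPy. This is only an illustration: OpenAI has not published Sora's patch sizes or latent shapes, so the function name `patchify`, the `(T, H, W, C)` layout, and the sizes below are all assumptions.

```python
import numpy as np


def patchify(latent, pt, ph, pw):
    """Split a latent video of shape (T, H, W, C) into flattened spacetime patches.

    Returns an array of shape (num_patches, pt * ph * pw * C).
    The layout and patch sizes are illustrative assumptions, not Sora's.
    """
    T, H, W, C = latent.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")
    # Break each axis into (number of patches, extent of one patch).
    x = latent.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Move the three patch-index axes to the front.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch, one column per value inside the patch.
    return x.reshape(-1, pt * ph * pw * C)


# Hypothetical latent: 4 frames, 8x8 spatial grid, 3 channels.
latent = np.arange(4 * 8 * 8 * 3, dtype=np.float32).reshape(4, 8, 8, 3)
tokens = patchify(latent, pt=2, ph=4, pw=4)
print(tokens.shape)  # (8, 96)
```

Each row of `tokens` plays the role that a text token plays in a language model: it is one unit of input in the sequence the Transformer processes.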

Technical Difficulties

The video compression network is loosely analogous to the variational autoencoder (VAE) in a latent diffusion model. Variational autoencoders learn latent representations of data and can be used to compress it so that it can be stored in a smaller size. Similarly, video compression networks are used to compress video data.

One open question is the “compression ratio”, that is, the ratio between the size of the compressed video data and the size of the original video data. At present, there is no fixed standard for the compression ratio of video compression networks, because it depends on factors such as model design, training, and compression algorithms.

To better preserve video features during compression, researchers need to explore more advanced compression techniques and algorithms, which may include improving the model structure, optimizing the training process, and adopting more efficient encoding methods. This research is ongoing, with the goal of compressing video while preserving as much of its quality as possible.
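As a worked example of the compression ratio defined above (compressed size divided by original size), the sketch below counts stored values before and after compression. The shapes are hypothetical and chosen only for the arithmetic; Sora's real latent dimensions are not public.

```python
import math


def compression_ratio(original_shape, latent_shape):
    """Compressed size divided by original size, counted in stored values."""
    return math.prod(latent_shape) / math.prod(original_shape)


# Hypothetical clip: 16 RGB frames at 256x256 pixels.
original_shape = (16, 256, 256, 3)
# Hypothetical latent: 4x fewer frames, 8x smaller per side, 4 channels.
latent_shape = (4, 32, 32, 4)

ratio = compression_ratio(original_shape, latent_shape)
print(f"compressed/original = {ratio:.6f} (1/{round(1 / ratio)})")
```

With these assumed shapes the latent holds 1/192 of the original number of values. A real comparison would also have to account for the number of bits stored per value.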

Scaling Transformers for video generation

Sora is a diffusion model: given noisy video patches and a text prompt, it is trained to predict the original “clean” patches. Notably, Sora is built on the highly scalable Transformer architecture. Transformers have shown strong scaling properties in large language models, so OpenAI believes that many of the techniques and methods used for large language models can be applied to Sora.

In its Sora research, OpenAI found that diffusion Transformers are well suited to generating video content and scale effectively, handling increasingly large video data and more complex generation tasks.
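The noising and denoising relationship can be illustrated with the standard DDPM-style formulation. This is a minimal sketch that assumes that formulation; Sora's exact noise schedule and prediction target are not published, and the names `add_noise`, `recover_clean`, and `alpha_bar` are illustrative.

```python
import numpy as np


def add_noise(x0, alpha_bar, rng):
    """DDPM-style forward step: blend clean patches with Gaussian noise."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps
    return x_t, eps


def recover_clean(x_t, eps_pred, alpha_bar):
    """Invert the forward step, given a prediction of the added noise."""
    return (x_t - np.sqrt(1.0 - alpha_bar) * eps_pred) / np.sqrt(alpha_bar)


rng = np.random.default_rng(0)
clean = rng.standard_normal((8, 96))  # 8 hypothetical patch tokens
noisy, eps = add_noise(clean, alpha_bar=0.5, rng=rng)

# With a perfect noise prediction, the clean patches are recovered.
restored = recover_clean(noisy, eps, alpha_bar=0.5)
print(np.allclose(restored, clean))  # True
```

In a trained model, `eps_pred` would come from a Transformer conditioned on the noisy patches, the noise level, and the text prompt. Here the true noise stands in for that prediction.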

Technical Difficulties

To train a scalable Transformer model, the video data must first be processed effectively and converted into small patches. This raises several challenges, such as how to let the model handle long contexts of up to one minute, how to reduce error accumulation during video processing, and how to ensure video quality and the consistency of objects.

In addition, the Transformer model needs to handle multiple types of data at the same time, such as video, images, and text. This is known as multimodal support: the model must understand the connections between these modalities and use them to generate more accurate and coherent video content. In general, training a scalable Transformer model requires solving these technical problems so that the model can process video data efficiently and generate high-quality video content.

Language Understanding

OpenAI found that training a generative system capable of converting text into video requires large amounts of video data with corresponding text captions. OpenAI therefore applied the re-captioning technique used in DALL·E 3 to video and trained a model that generates highly descriptive video captions. This model produces high-quality text captions for all of the video data, and the videos and their captions are then used together as video-text pairs for training. Such high-quality training data ensures a strong match between the text (prompt) and the video data.

In the generation phase, Sora uses OpenAI’s GPT model to rewrite the text prompt provided by the user into a new prompt that is both high-quality and highly descriptive. This improved prompt is then fed into the video generation model to complete the video generation process.

Technical Difficulties

To train a high-quality video caption generation model, a large amount of high-quality video data is required. The data needs to cover many types of video content, such as movies, documentaries, games, and videos rendered by 3D engines, and it also needs to be diverse and general.

The data preparation process includes two important steps: data collection and labeling. Annotation involves precisely slicing long videos so that the model can generate accurate captions for each video clip. This means annotators need to watch the video carefully and provide precise descriptions for each clip.
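The slicing step can be sketched as a function that turns one long video into clip boundaries, with one caption to be written per clip. Fixed-length cuts are an assumption made to keep the example simple; a real pipeline would more likely cut at scene boundaries. The function name and parameters are hypothetical.

```python
def slice_into_clips(duration_s, clip_len_s, min_len_s=1.0):
    """Return (start, end) times in seconds that cover a video.

    Clips are cut at fixed intervals; a trailing clip shorter than
    min_len_s is dropped.
    """
    if clip_len_s <= 0:
        raise ValueError("clip_len_s must be positive")
    clips = []
    start = 0.0
    while start < duration_s:
        end = min(start + clip_len_s, duration_s)
        if end - start >= min_len_s:
            clips.append((start, end))
        start = end
    return clips


print(slice_into_clips(25.0, 10.0))
# [(0.0, 10.0), (10.0, 20.0), (20.0, 25.0)]
```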

High-quality video data has long been a scarce resource in China, but this situation may improve with the development of domestic short-video services. The rise of short-video platforms may accelerate the collection of high-quality short video content in Chinese, which can be used to train video captioning models and thereby improve their performance and accuracy.

World models, emergent simulation capabilities

When trained at scale, Sora demonstrates “emergent simulation capabilities”. These capabilities allow Sora to simulate some aspects of people, animals, and environments in the physical world. They are not driven by explicit inductive biases for 3D or objects built into the model; rather, they are emergent phenomena that arise from the scale and complexity of the model.

Specifically, Sora’s demonstrated capabilities include:

- 3D Consistency : Sora is able to generate videos with dynamic camera movement, with characters and scene elements moving consistently in 3D space as the camera moves and rotates.

- Long-range coherence and object persistence : In video generation, maintaining temporal continuity is a challenge. Sora is able to maintain the presence of people, animals or objects even when they are occluded or out of frame, and generates multiple shots of the same character in a single sample, maintaining their appearance throughout the video.

- Interacting with the world : Sora is able to simulate simple actions, such as a painter leaving new brushstrokes on a canvas that persist over time, or a person eating a burger and leaving bite marks.

- Simulating the digital world : Sora is also able to simulate artificial processes, such as video games. It can control the player in Minecraft with a basic policy while rendering the world and its dynamics with high fidelity.

These capabilities indicate that if the Sora model continues to scale and improve, it has the potential to become a highly capable simulator of the physical and digital worlds. This means that highly realistic virtual worlds like those in the science fiction movie “The Matrix” may appear in the future. As the technology advances, scenes that once existed only in science fiction may no longer be far away.

Technical Difficulties

“Big” model, “high” computing power, “massive” data