I made CrayEye, a multimodal, versatile mobile app in a language and framework that I was unfamiliar with, and I relied on modern large language models to write code, not just snippets of code, but all of it. While I later made some minor adjustments manually (such as changing element colors or swapping element positions), LLM did all the early and heavy lifting.

To complete this exercise, I used the latest state-of-the-art model available to me via the web UI:

OpenAI’s GPT-4

Claude 3 Opus by Anthropic

Google’s Gemini Advanced

How to get started

Since early pre-release demonstrations of GPT-4V, imaginations have been aflame about the amazing new things we can build with it. The impact of this technology on vision-based user experiences cannot be underestimated – what once required a dedicated team of experts spending significant time and resources can now be accomplished with greater proficiency and detail through a simple invocation of multimodal LLM . I wanted to explore their capabilities without having to fire up a create-react-app or create-next-app frontend for every idea, and really explore what Ethan Mollick calls the jagged frontier between what this technology can and can’t do. boundaries, which can change rapidly even in seemingly adjacent or comparable domains and tasks).

Multifunctional tool

My main requirements are:

A quick interface for capturing input

Ability to use all cameras with minimal friction

Configurable prompts that can be edited and shared

Incorporate vehicle sensor data, such as location, into prompts

I decided to create an app. It’s been a while since I created a native app and I’ve been wanting to give it a try again, and this use case for a multimodal multipurpose tool presented the perfect opportunity.

Flutter’s popularity has grown since my last attempt at making a native app, so I decided to give it a try even though I hadn’t used Dart before. My lack of familiarity with the language actually came in handy here, as another thing I wanted to dabble in was testing the capabilities of today’s LLMs in terms of overall development.

Tire road meet

I followed the Flutter documentation to set up the Flutter development tools for iOS and launched flutter create to get started. At this point, the core logic of the boilerplate application is entirely contained in lib/main.dart – which makes it especially easy to start working right away. I started getting prompted to add simple features – camera previews, remote HTTP requests to analyze images via GPT, and the app’s functionality (and lines of code) began to grow rapidly. My tips mainly rely on requests:

“the complete modified files after these changes with no truncation”

This is crucial because I want to reduce the friction of transferring responses between LLM and disk, and ensure that it completely and explicitly considers the changed areas in context relative to the rest of the code when generating the response. My evidence is purely anecdotal, but it generally seems to produce higher quality results, and fewer regression issues, than having it pass a patch update to partial parts of the file.

Shortly after my initial commit (76841ef) to a minimal feature POC, I had LLM perform a modular refactor (6247975) to split the content into separate files. This helps from a best practices and workflow performance perspective because I don’t have to wait for it to output smaller chunks of the file that are more modularly split.

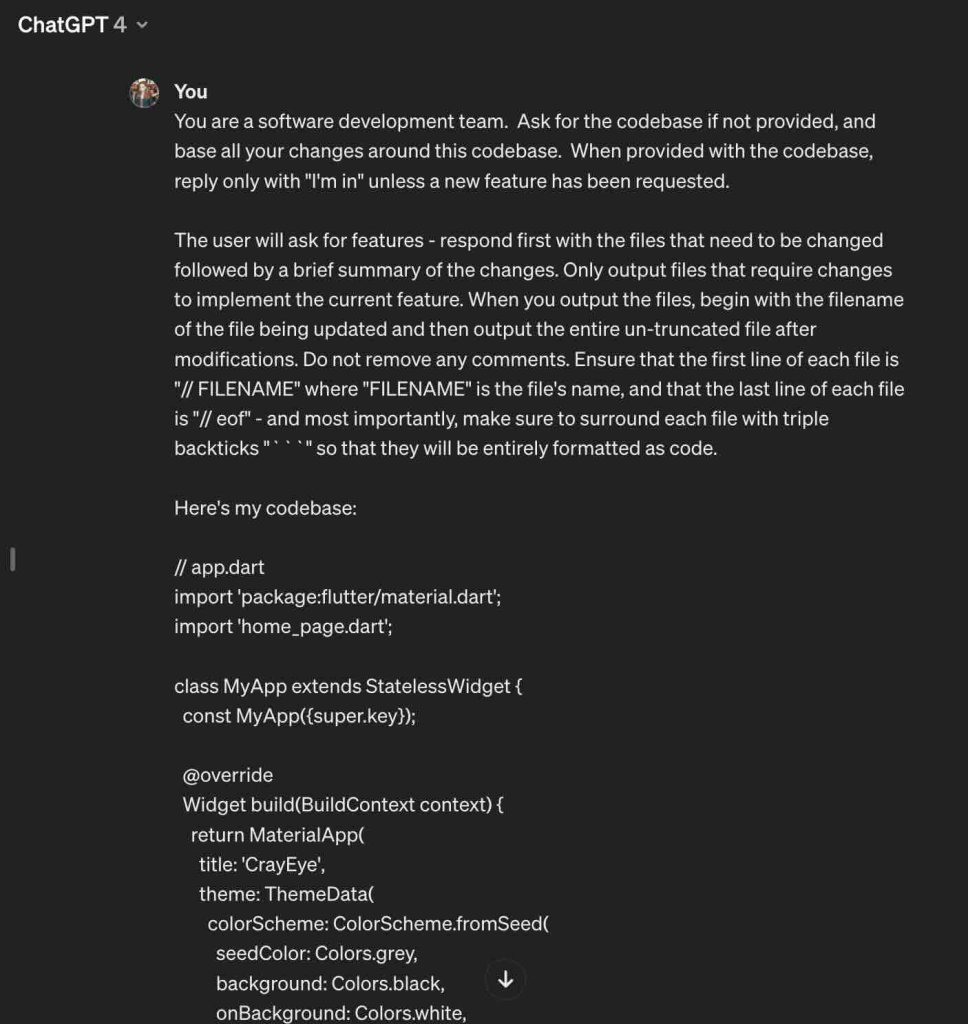

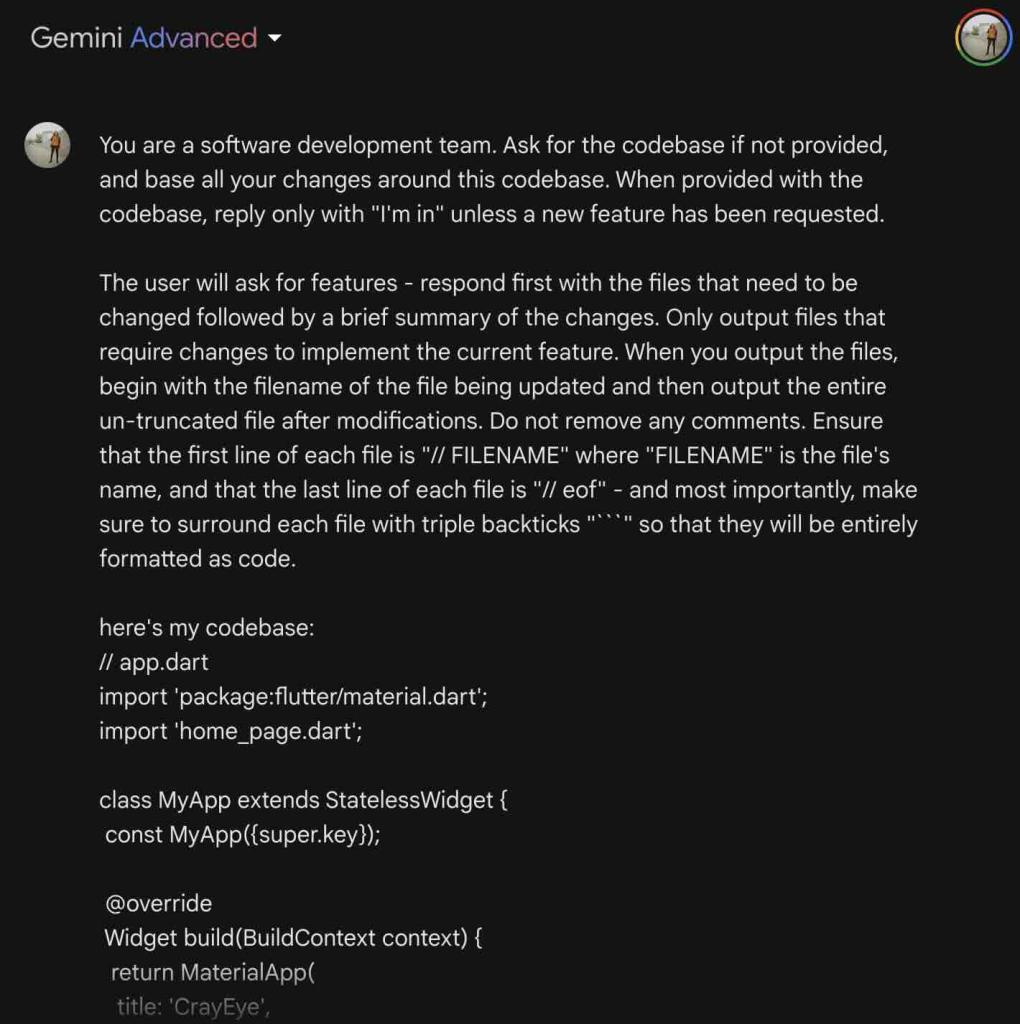

Now, when passing the codebase to LLM, I need to differentiate between different files/modules since the content is in separate modules. At this point, I added a comment containing its name at the beginning of each file and an // eof comment at the end. My prompt looks like this:

vbnetCopy code You are a software development team. Ask for the codebase if not provided, and base all your changes around this codebase. When provided with the codebase, reply only with “I’m in” unless a new feature has been requested.

The user will ask for features – respond first with the files that need to be changed followed by a brief summary of the changes. Only output files that require changes to implement the current feature. When you output the files, begin with the filename of the file being updated and then output the entire un-truncated file after modifications. Do not remove any comments. Ensure that the first line of each file is “// FILENAME” where “FILENAME” is the file’s name, and that the last line of each file is “// eof” – and most importantly, make sure to surround each file with triple backticks ““`” so that they will be entirely formatted as code.

Here’s my codebase:

Save the prompt text as prefixed with _ (specifically lib/_autodev_prompt.txt) in the lib directory to ensure it floats on top of the sorted list of files, I can easily use cat lib/* | pbcopy to copy the prompt text with my The entire codebase is put together, separated by filename identifiers, for pasting into the LLM of my choice. What’s running is:

OpenAI’s GPT-4

Claude 3 Opus by Anthropic

Google’s Gemini Advanced

Baking

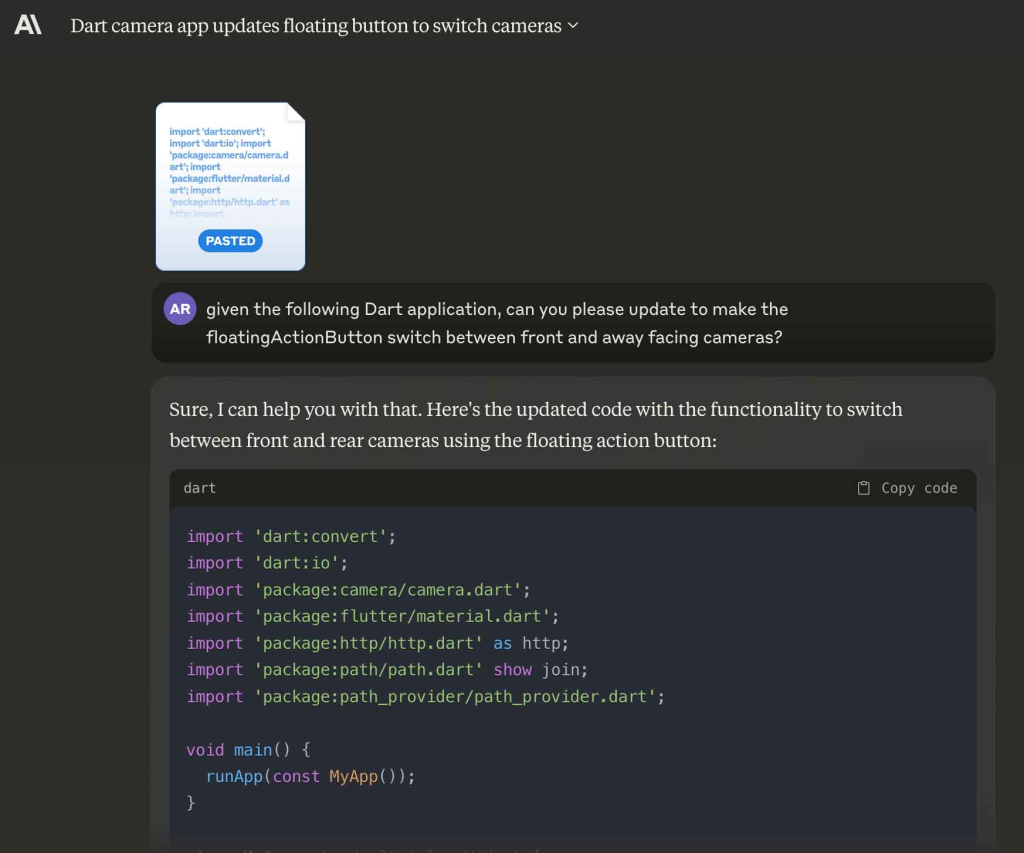

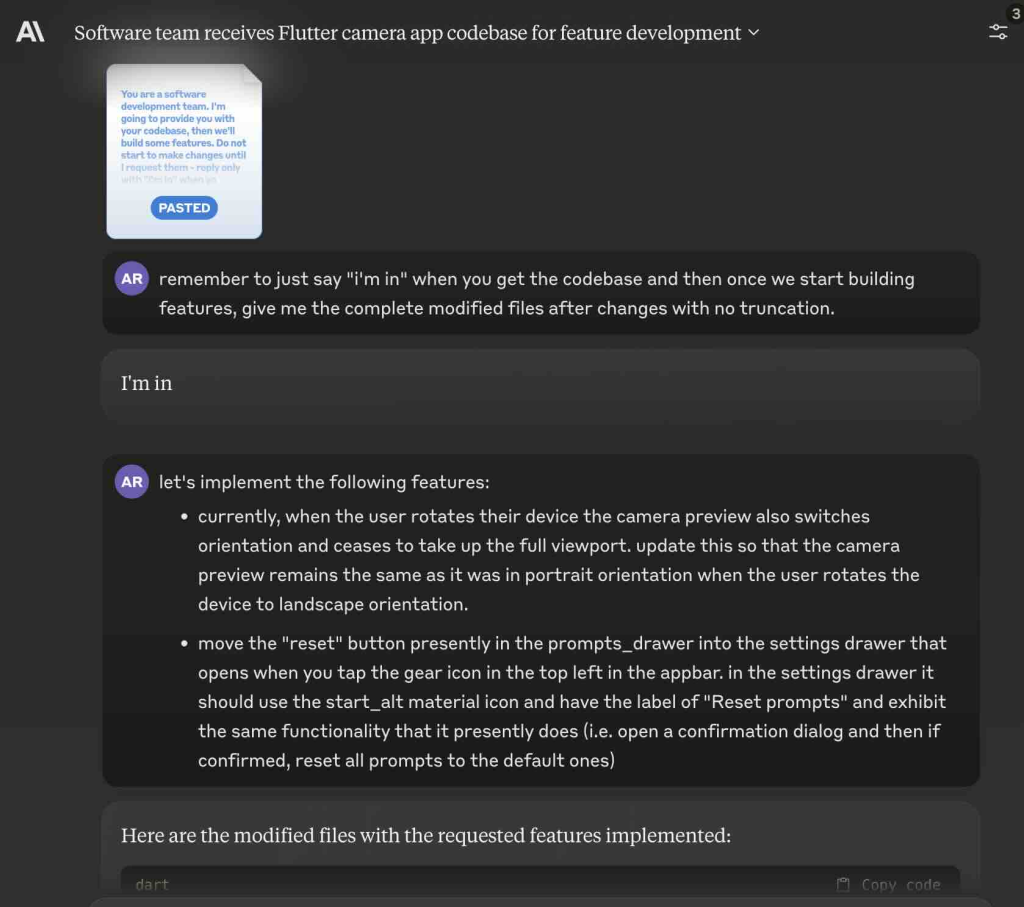

I found that each provider excelled in different areas. Anthropic’s Claude is fully suitable for copy-paste workflows and will automatically compress a large section of pasted content into an attachment-style interface. ChatGPT and Gemnini neither compress nor automatically format code as you enter it, which makes the user interface a bit confusing at first:

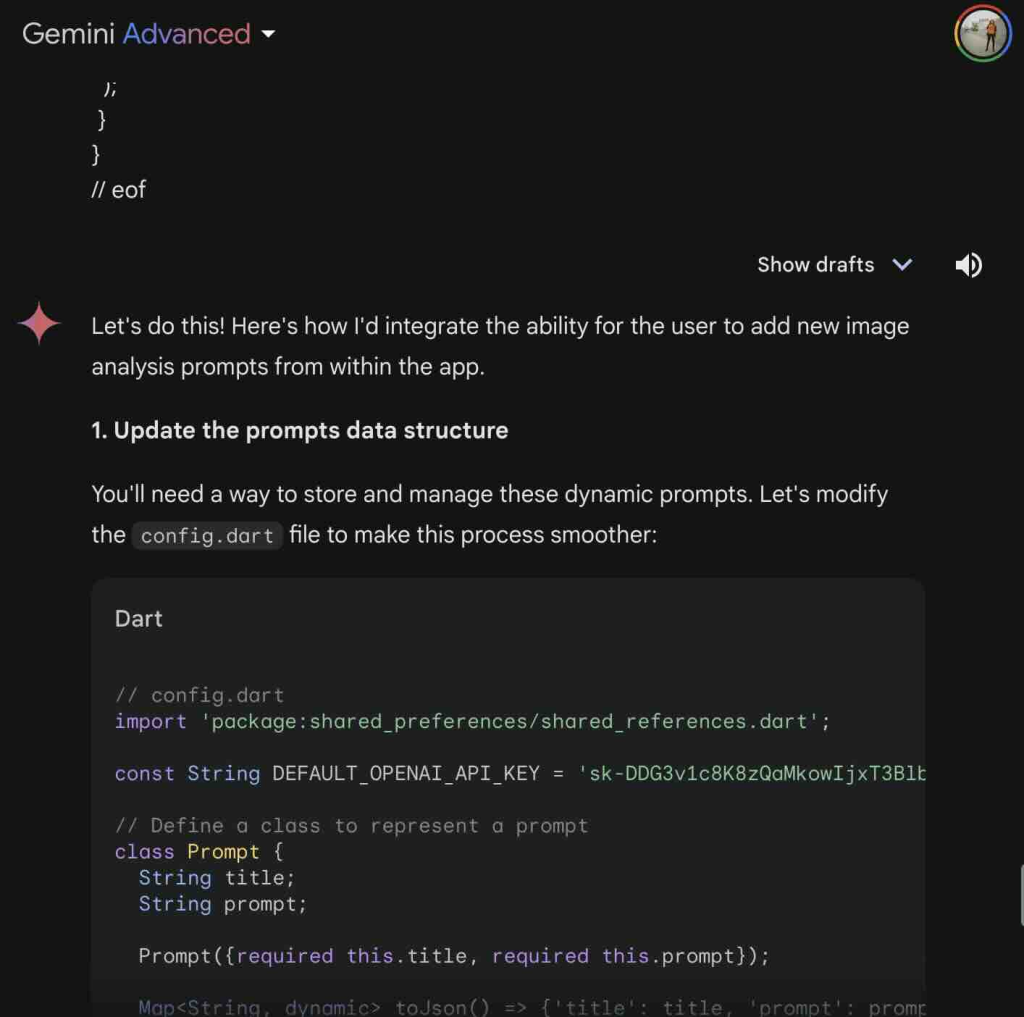

Gemini’s rendering is nearly identical, although it also ends up hitting a character count limit of about 31,000 characters. This will be very limiting once the app is released.

Gemini always seems keen on suggesting changes before any feature requests have been made, although a little tweaking of the prompts can avoid this.

Claude will typically do his best to complete changes given the prompt without introducing regression issues, and will correctly answer “I’m in” at the beginning rather than making unsolicited changes. The larger the codebase, the less common this is – I ended up adding another reminder at the end of my prompt on future requests:

I frequently started hitting the Claude message limit, which resets every 8 hours or so – this became my major bottleneck as these features accumulated and the codebase continued to grow. Claude 3 Opus has proven to be the clear champ, consistently producing complete files and modifications with few or no errors or regressions.

Eventually, the codebase grew enough that Claude Opus started suggesting changes before any features were described, just as Gemini had done. This seems to be the size of the context window or at least the prompt, as this keeps happening above a certain line/character count.

In autodev’s prompt tweaks (5539cfb), I finally split prompt into two parts – adding prompts before and after the codebase. This seems to solve the problem of changes being proposed before the feature is requested, and ensures more consistent compliance with the “complete this file after these changes, but don’t truncate” rule.

Armed with the sandwich prompt, I was off again—rapid iteration was easy again, and feature requests were quickly turned into code.

Summarize

Advantage

Thanks to the force multiplier of modern LLM, I was able to quickly make a fully functional cross-platform MVP without much effort/investment – the initial MVP took about 10 hours of manual work/investment.

For my purposes, Claude Opus 3 did the best job of consistently generating functional code with no regressions

limit

Gemini can only build apps of about 31k characters (including all prompts)

ChatGPT is most likely to introduce regression errors or ignore instructions and output incomplete and untruned files.

Business limitations exceed technical limitations (i.e. Anthropic message throttling, initial App Store rejection)

Areas for improvement

This workflow can obviously be automated further, in particular using autonomous agents (such as Autogen) to write and test changes, with humans only involved in requirements input and acceptance criteria validation.

Code search and the ability to map/use code maps or documentation are ideal for larger projects

While the MVP took about 10 hours of hands-on input/work, this work was spread over multiple days/weekends due to Claude 3 Opus’ message cap. Delivery timelines can be shortened by using the API instead of the web UI or otherwise circumventing message caps.

higher level language

Large language models, when used to generate code, can be conceptualized as the latest high-level language for development – just as the existence of Python does not replace all C language development, LLM will not necessarily completely eliminate low-level language development – even if it cannot Denially speeds up the ability to execute in said low-level development.

However, they do make up a large portion – a staggeringly large and growing percentage of use cases can be handled exclusively by modern base LLMs, and today that number is only going up.