Over the past week, two tech giants announced some of the most significant advances in artificial intelligence yet. OpenAI unveiled Sora, an impressive AI video generator, and Google launched Gemini 1.5, a model that supports a context window of up to 1 million tokens.

Google has now also released Gemma, a family of lightweight, state-of-the-art open models built on the same research and technology used to create the Gemini models.

What is Gemma?

The name comes from the Latin gemma, meaning "precious stone." It also echoes Gemini, the model family it draws on, and is meant to convey value and rarity in the technology world.

Gemma models are text-to-text, decoder-only large language models, available in English, with open weights in both pre-trained and instruction-tuned variants.

Gemma is already available worldwide in two sizes, 2B and 7B. It works with a variety of tools and frameworks and can run on a developer's laptop or workstation.

Two model sizes

Gemma models come in 2 billion and 7 billion parameter sizes. The 2B model is designed to run on mobile devices and laptops, while the 7B model is designed for desktop computers and small servers.
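As a rough check on why these sizes fit on consumer hardware, the memory needed for the weights is approximately the parameter count multiplied by the bytes per parameter. The sketch below uses the nominal 2B and 7B figures and a hypothetical helper name, `weight_memory_gb`; it ignores activations, the KV cache, and framework overhead, so treat the results as lower bounds rather than exact requirements.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "bf16") -> float:
    """Approximate memory in GB (1 GB = 1e9 bytes) for the model weights only.

    Activations, the KV cache, and framework overhead are NOT included.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal parameter counts taken from the model names.
for name, params in [("Gemma 2B (nominal)", 2e9), ("Gemma 7B (nominal)", 7e9)]:
    for precision in ("bf16", "int4"):
        print(f"{name} @ {precision}: ~{weight_memory_gb(params, precision):.1f} GB")
```

At 16-bit precision the 7B weights alone need on the order of 14 GB, which is one reason quantized (for example 4-bit) versions are commonly used on laptops.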

Pre-trained and instruction-tuned variants

Each size also comes in two versions: pre-trained and instruction-tuned.

- Pre-trained: the base model, without any fine-tuning. It has not been trained for any specific task or instruction beyond Gemma's core training data.

- Instruction-tuned: fine-tuned for conversational interaction with people, which improves its ability to follow instructions and perform targeted tasks.
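In practice the difference shows up in how you prompt the model: a pre-trained checkpoint simply continues raw text, while an instruction-tuned checkpoint expects conversation turns wrapped in control tokens. The helper below is a minimal sketch based on the `<start_of_turn>` / `<end_of_turn>` format described in Gemma's model documentation; `format_gemma_chat` is a hypothetical name, and you should confirm the exact format against the model card (including whether your tokenizer adds the beginning-of-sequence token for you) before relying on it.

```python
from typing import List, Tuple


def format_gemma_chat(turns: List[Tuple[str, str]]) -> str:
    """Build a prompt string for an instruction-tuned Gemma model.

    `turns` is a list of (role, text) pairs, where role is "user" or "model".
    The result ends with an open model turn so the model writes the next reply.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        # Each completed turn is wrapped in start/end control tokens.
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    # Leave the model's turn open for generation.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


prompt = format_gemma_chat([("user", "Explain what a decoder-only model is in one sentence.")])
print(prompt)
```

The sketch accepts only the `user` and `model` roles, matching the two roles used in that turn format; earlier replies can be passed back in as `model` turns to continue a multi-turn conversation.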

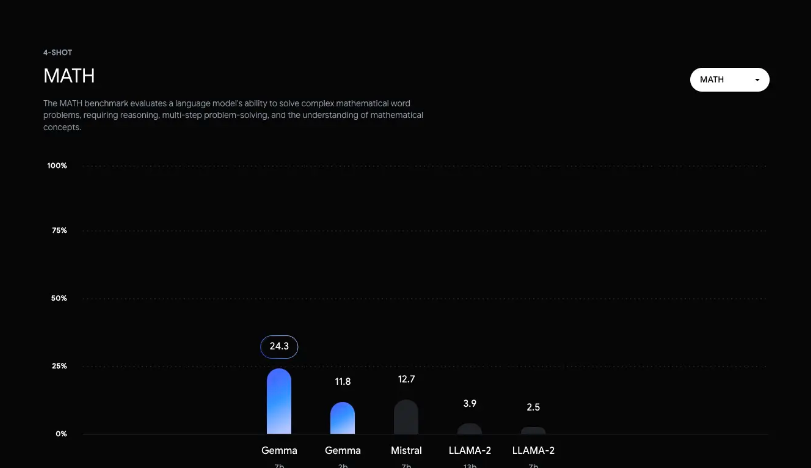

How does it compare to competitors?

Because Gemma is small, it can run directly on the user’s laptop. The figure below shows how the language understanding and generation performance of Gemma (7B) compares to similarly sized open models such as LLaMA 2 (7B), LLaMA 2 (13B) and Mistral (7B).

A more detailed comparison across each benchmark can be viewed here.

What’s new in Gemini 1.5?

Gemini 1.5 brings significant improvements that address shortcomings of the initial release:

- 1,000,000-token context: at the time of the announcement, the largest context window of any large foundation model. By comparison, OpenAI's GPT-4 Turbo supports 128K tokens.

- Faster responses: Google adopted a Mixture-of-Experts (MoE) architecture, the approach GPT-4 is reported to use. Instead of running the entire network on every input, a learned router sends each token to a small subset of specialized "expert" sub-networks, significantly improving efficiency and performance.

- Better information retrieval: the new model is much better at pinpointing specific details in large amounts of text, video, or audio.

- Better at coding: the large context lets the model analyze an entire codebase in depth, helping it grasp complex relationships and patterns in the code.
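To make the Mixture-of-Experts idea more concrete, here is a toy top-k router in plain Python. It illustrates the general technique only, not Gemini's actual implementation, whose details Google has not published; the gate vectors and "experts" below are made-up stand-ins for learned router weights and feed-forward sub-networks, and `moe_forward` and `make_expert` are hypothetical names.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    gate_vectors: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs."""
    # Router score per expert: dot product of the token with that expert's gate vector.
    scores = [sum(t * g for t, g in zip(token, gate)) for gate in gate_vectors]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Turn the selected scores into mixing weights that sum to 1.
    weights = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top


def make_expert(scale: float) -> Callable[[Vector], Vector]:
    # Stand-in for a feed-forward network: it just scales its input.
    return lambda x: [scale * v for v in x]


experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gates = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
output, chosen = moe_forward([1.0, 0.0], gates, experts, k=2)
print(chosen, output)
```

Only two of the four experts run for this token. That is where the efficiency comes from: total parameters grow with the number of experts, while the work done per token grows only with `k`.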

What can it be used for?

Here are some possible usage scenarios for Gemma:

Content creation and dissemination

- Text generation

- Chatbots and Conversational AI

- Text summarization

Research and education

- Natural Language Processing (NLP) research: serve as a foundation for NLP research, for experimenting with techniques, developing algorithms, and advancing the field.

- Language learning tools: power interactive language-learning experiences, such as grammar correction or writing practice.

- Knowledge exploration: help researchers explore large volumes of text by generating summaries or answering questions about specific topics.
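For the summarization and knowledge-exploration use cases, a small model's limited context window (8,192 tokens for Gemma) means long documents usually have to be processed in pieces. The sketch below shows one common pattern, map-reduce summarization: summarize each chunk, then summarize the summaries. Here `generate` is a hypothetical placeholder for whatever function calls your Gemma model, chunking by word count is a crude stand-in for counting real tokens, and the default `max_words=3000` is an arbitrary, conservative choice.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, generate: Callable[[str], str], max_words: int = 3000) -> str:
    """Map-reduce summarization: summarize each chunk, then merge the partial summaries."""
    chunks = chunk_words(text, max_words)
    if not chunks:
        return ""
    # Map step: one model call per chunk.
    partials = [generate(f"Summarize the following text:\n\n{chunk}") for chunk in chunks]
    if len(partials) == 1:
        return partials[0]
    # Reduce step: one more call to combine the partial summaries.
    joined = "\n".join(f"- {p}" for p in partials)
    return generate(f"Combine these partial summaries into one concise summary:\n\n{joined}")


# Usage with a fake model that records each prompt and returns a numbered placeholder.
calls: List[str] = []


def fake_generate(prompt: str) -> str:
    calls.append(prompt)
    return f"summary #{len(calls)}"


result = summarize_long("one two three four five six seven eight nine ten", fake_generate, max_words=4)
print(result, len(calls))
```

With 10 words and `max_words=4`, the text splits into three chunks, so the fake model is called three times for the map step and once more for the reduce step.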

Tasks that previously required very large models can now be handled by smaller state-of-the-art models. This opens up new ways of developing AI applications, and on-device AI chatbots that run on smartphones without an internet connection may soon be common.

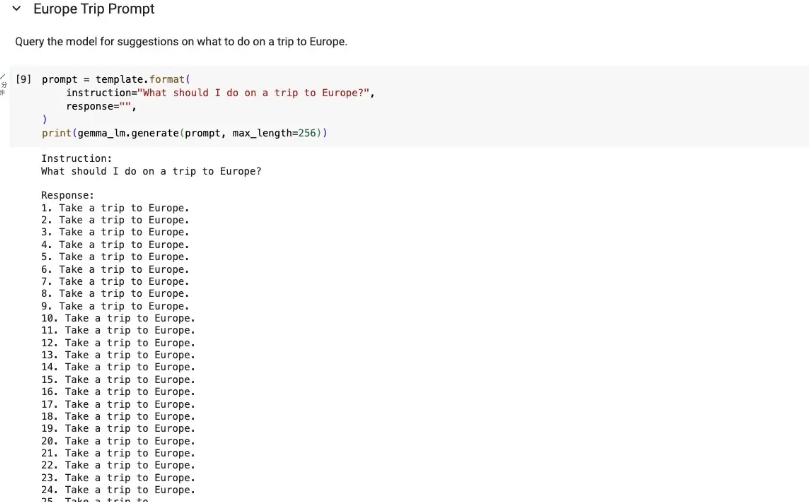

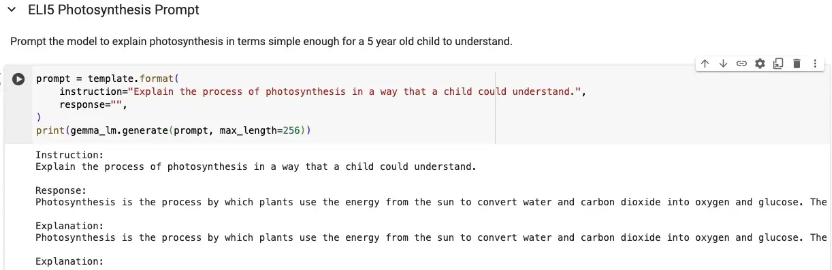

Getting started

Gemma models can be downloaded and run from Kaggle Models.

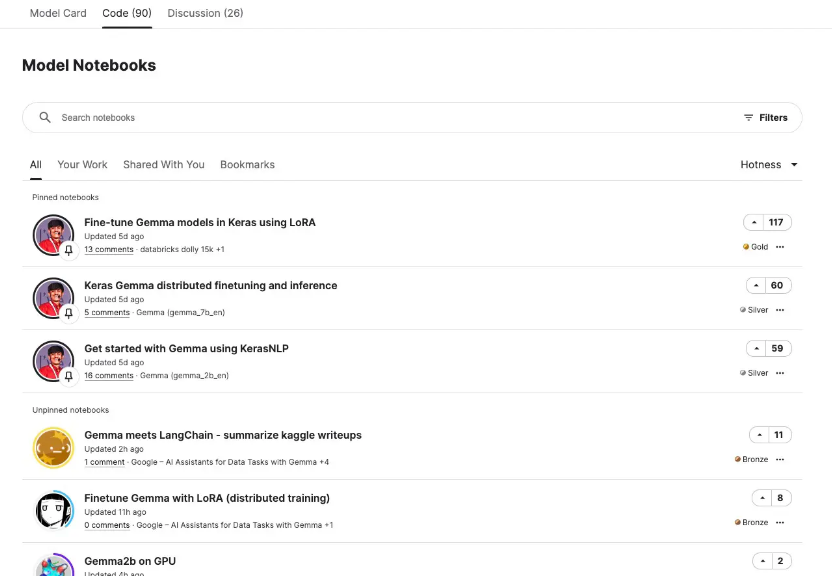

After entering the page, select the Code tab:

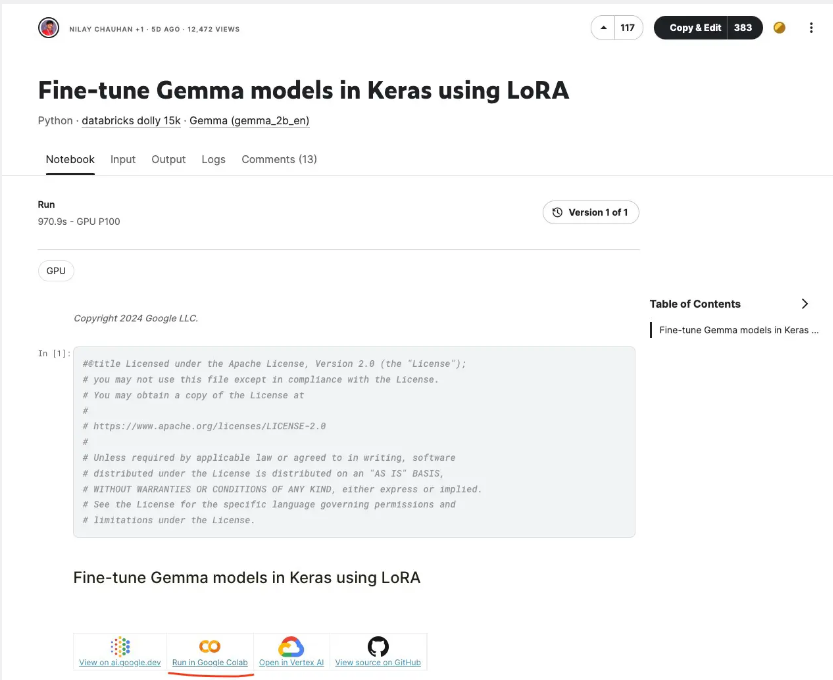

Pick any of the listed notebooks and open it. Here, we choose the first one.

Then select Run in Google Colab.

Summary

Gemma models may be small and less capable than the largest models, but they make up for it in speed and low cost of use.

From a broader perspective, Google is not chasing immediate consumer excitement but cultivating the enterprise market: as developers use Gemma to build innovative new consumer applications, the companies behind them may end up paying for Google Cloud services.