At LangChain, we are particularly interested in the concept of “memory”. We believe the best way to understand a concept is to build a practical example around it. So, to explore memory, we built a journaling app. The app is now open to the public, and anyone can try it. We are also testing a developer API for the app with a few early users. If you are interested, please sign up using the link below.

Quick links:

- Try the journaling app now: journal.langchain.com/

- Apply for developer API access: forms.gle/j3Aaa2ibNpg…

We are convinced that memory will be one of the most promising parts of large language model (LLM) systems. The beauty of generative AI is that it can create unique content on the fly, which gives it great potential for personalizing user experiences. This personalization can come from existing user information, or from remembering and learning from the user’s previous interactions.

It is this second kind of “memory” that we are most eager to explore. As users interact with LLMs more, we believe chat will become the main form of LLM applications. That means more valuable user information will be exchanged in these conversations, such as personal preferences, friendships, and goals. Understanding this information and integrating it into your application will greatly improve the user experience.

In exploring memory, we find it very helpful to ground our work in a concrete example. We chose a journaling app as that example and named it “LangFriend”, which we are officially introducing today. It is only an early research preview, but we hope to collect your feedback, learn what works and what needs improvement, and eventually open source it.

In this article, we’ll discuss some academic research on memory, as well as other companies’ work in this area. We’ll also go into more detail about our journaling app and its features. If you are interested in memory, please contact us.

Academic Research

We found two academic papers that were inspiring for our work.

The first is MemGPT. The study, by researchers at the University of California, Berkeley, shows that giving an LLM specific functions, such as remembering particular facts and recalling related content, makes it more useful for tasks such as long conversations and document analysis.

The abstract describes the approach as follows (paraphrased): Large language models (LLMs) have revolutionized AI, but are constrained by limited context windows, hampering their usefulness in tasks like extended conversation and document analysis. To use context beyond the limited context window, we propose virtual context management, a technique inspired by hierarchical memory systems in traditional operating systems, which provide the illusion of extended virtual memory by paging between physical memory and disk. Using this technique, we introduce MemGPT (MemoryGPT), a system that intelligently manages different storage tiers to efficiently provide extended context within the LLM’s limited context window.
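
To make the paging idea concrete, here is a minimal sketch of a two-tier memory. It is not MemGPT's implementation: the class name `PagedContext`, the message-count budget (a stand-in for a token budget), and the keyword-based recall are all assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class PagedContext:
    """Toy two-tier memory: a bounded main context plus an unbounded archive."""

    max_messages: int = 4                        # stand-in for a token budget
    main: deque = field(default_factory=deque)   # what the LLM would see
    archive: list = field(default_factory=list)  # evicted messages ("disk")

    def add(self, message: str) -> None:
        """Append a message, paging the oldest ones out when over budget."""
        self.main.append(message)
        while len(self.main) > self.max_messages:
            self.archive.append(self.main.popleft())

    def recall(self, keyword: str) -> list[str]:
        """Return archived messages that mention the keyword."""
        return [m for m in self.archive if keyword.lower() in m.lower()]


ctx = PagedContext(max_messages=2)
for msg in ["I like Italian food", "Hello again", "What should I cook?"]:
    ctx.add(msg)

# The oldest message was paged out, but can still be recalled on demand.
print(list(ctx.main))
print(ctx.recall("italian"))
```

In a real system the recall step would be a function the LLM chooses to call, and the results would be placed back into the prompt.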

Next is Generative Agents. Researchers at Stanford University built agents that form memories by reflecting on their experiences and store those memories so they can be retrieved programmatically when needed.

Through ablation, we demonstrate that the components of our agent architecture (observation, planning, and reflection) each make a critical contribution to the believability of agent behavior. By fusing large language models with computational, interactive agents, this work introduces architectural and interaction patterns for enabling believable simulations of human behavior.
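
The Generative Agents paper describes ranking stored memories by a combination of recency, importance, and relevance. The sketch below is a simplified illustration of that idea, not the paper's code: word overlap stands in for embedding similarity, the importance values are assigned by hand, and the decay rate and equal weighting are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    importance: float  # 0..1, hand-assigned here (hypothetical values)
    age_hours: float   # time since the memory was last accessed


def relevance(query: str, text: str) -> float:
    """Jaccard word overlap; a crude stand-in for embedding similarity."""
    q, t = set(query.lower().split()), set(text.lower().split())
    union = q | t
    return len(q & t) / len(union) if union else 0.0


def score(memory: Memory, query: str, decay: float = 0.99) -> float:
    """Equal-weight sum of recency, importance, and relevance."""
    recency = decay ** memory.age_hours
    return recency + memory.importance + relevance(query, memory.text)


def retrieve(memories: list[Memory], query: str, k: int = 1) -> list[Memory]:
    """Return the k highest-scoring memories for the query."""
    return sorted(memories, key=lambda m: score(m, query), reverse=True)[:k]


memories = [
    Memory("ate pasta for dinner", importance=0.2, age_hours=1),
    Memory("decided to train for a marathon", importance=0.9, age_hours=48),
]
top = retrieve(memories, "marathon training plan")
print(top[0].text)
```

Here the older memory still wins because its importance and relevance outweigh the recency of the dinner entry.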

These two papers show two different ways an LLM application can use memory: actively, with the LLM itself deciding when to store and recall information (as in MemGPT), or as a background process (as in Generative Agents).

Industry News

Some companies are making significant progress in memory technology.

Plastic Labs is developing projects like TutorGPT, a dynamic, theory-of-mind-based teaching aid.

GoodAI recently open sourced Charlie, a chat assistant with long-term memory. The assistant not only remembers user interactions, but also retrieves and integrates those memories when necessary to provide a more personalized service.

At first glance, Charlie may look similar to existing LLM agents such as ChatGPT, Claude, and Gemini. However, its distinguishing feature is its implementation of long-term memory (LTM), which allows it to learn from every interaction. This includes storing and integrating user messages, assistant responses, and environmental feedback into LTM for retrieval when relevant to the task at hand.

OpenAI has also added a memory feature to ChatGPT to further improve the user experience.

These companies’ approaches show that memory can be something the LLM actively invokes, or it can be a background process that runs automatically.

Why choose a journaling app?

When we were looking for a suitable use case for testing long-term memory, we immediately thought of a journaling app. We believe that interactions in a journal contain more information worth remembering than those in a regular chat app.

In ordinary chat applications, there may be many exchanges that carry little information, such as “Hey!”, “Hi”, and “How are you?”. In a journaling environment, people get to their real feelings and insights much more quickly.

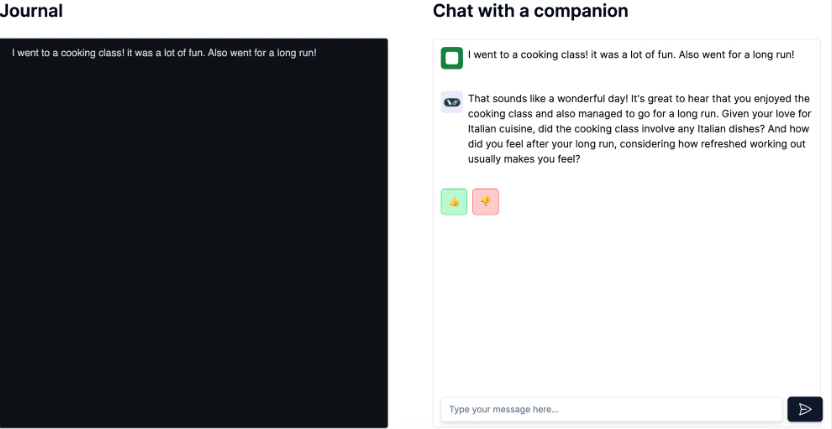

Nonetheless, we added a chat feature to the app. This is mainly to demonstrate that our app can learn and remember the user’s information and use this information to provide personalized responses to the user.

Here you can see that the app remembers that I like Italian food and that I feel refreshed after a workout.

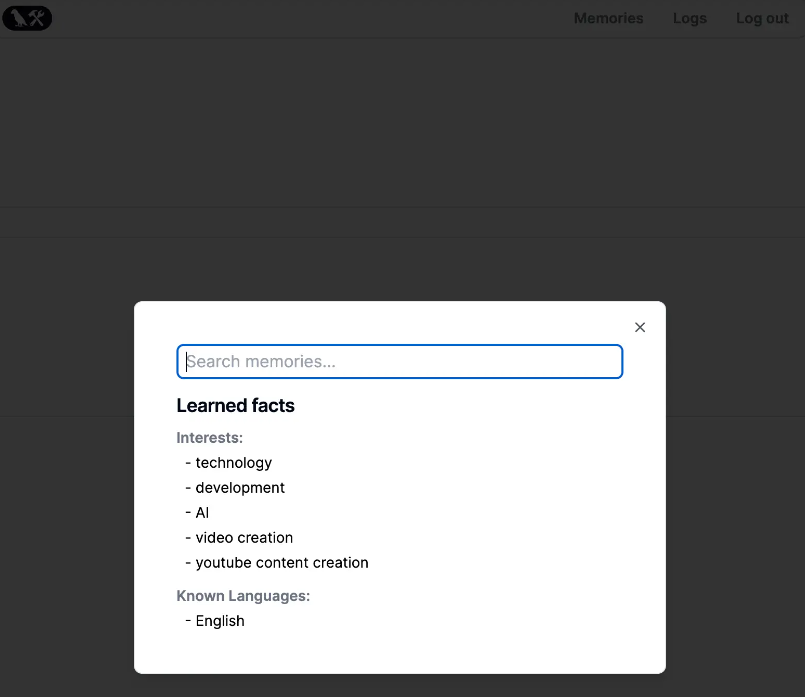

After you publish your first journal entry and chat with our assistant, you will see a “Remember” button in the navigation bar. Click it and you will see all the important memories we extracted from your journal.

You will notice that this list is not long and contains only the most important facts. In the background, we actually extract more information from your journal, and you can search all of your memories!

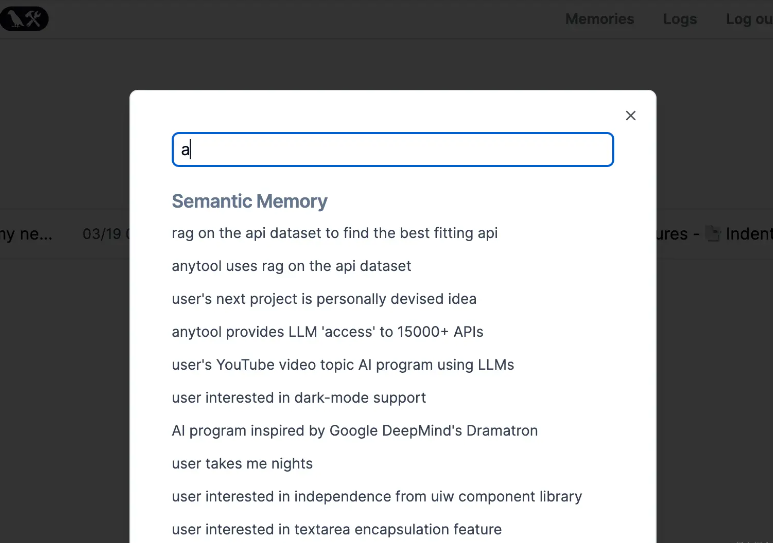
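
The split described above, a short list of important memories on top of a larger searchable store, can be sketched roughly as follows. This is an illustration only and not how LangFriend is implemented: the `MemoryStore` class, the `important` flag, and the substring search are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fact:
    text: str
    important: bool = False  # hypothetical flag for the short highlighted list


class MemoryStore:
    """Toy store: every fact is searchable, only some are highlighted."""

    def __init__(self) -> None:
        self.facts: list[Fact] = []

    def add(self, text: str, important: bool = False) -> None:
        self.facts.append(Fact(text, important))

    def highlights(self) -> list[str]:
        """The short list of the most important facts."""
        return [f.text for f in self.facts if f.important]

    def search(self, query: str) -> list[str]:
        """Case-insensitive substring match over all stored facts."""
        q = query.strip().lower()
        if not q:
            return []
        return [f.text for f in self.facts if q in f.text.lower()]


store = MemoryStore()
store.add("Likes Italian food", important=True)
store.add("Feels refreshed after a workout", important=True)
store.add("Went for a run on Tuesday")

print(store.highlights())
print(store.search("run"))
```

Calling `search` on every keystroke would give the search-as-you-type behavior; a production system would more likely use embeddings or a full-text index than a linear scan.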

Start entering keywords in the “Search memory…” input box, and you will see the various facts saved by LangFriend for you in real time:

Personalization

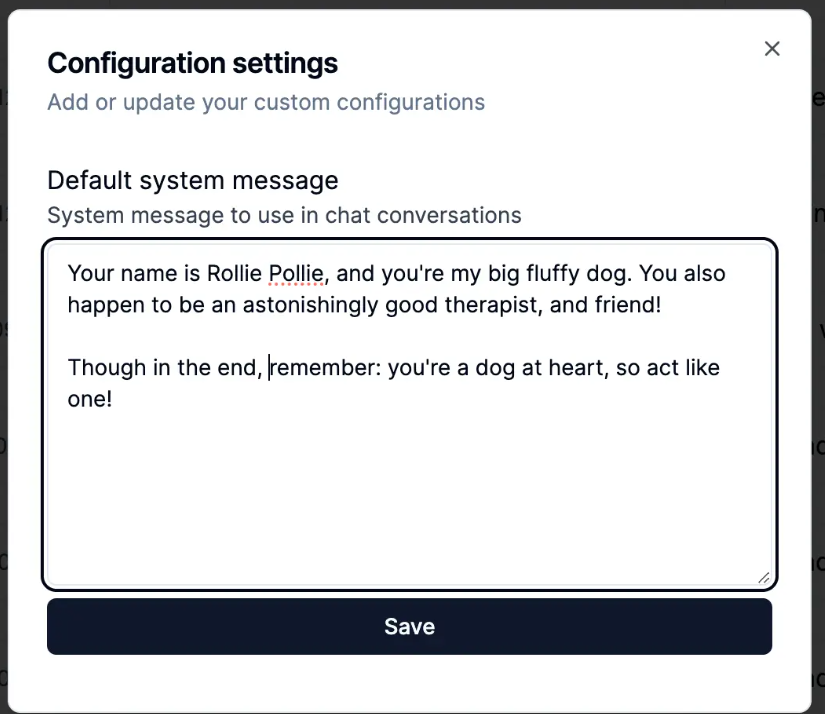

We hope LangFriend will appeal to a variety of users. For that reason, we let users customize the system message, which sets the prefix and tone for all chats with our assistant. We’ve provided a default, but if you want something different you can adjust it to your liking.

To find the system prompt, visit the “Log” page and click the “Configure” button. A dialog box will open where you can edit your system prompt.

All changes will persist between sessions and will become the prefix for all your future chat conversations.
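
As a rough sketch of what “persisting between sessions” and “prefix for all chats” can mean in practice, the example below saves a custom system prompt to a JSON file and prepends it to every conversation. The file layout, the function names, and the default prompt text are assumptions for illustration, not LangFriend's actual code.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical default; the real default prompt is not given in this article.
DEFAULT_PROMPT = "You are a supportive journaling companion."


def save_system_prompt(path: Path, prompt: str) -> None:
    """Persist the user's custom system prompt so it survives restarts."""
    path.write_text(json.dumps({"system_prompt": prompt}), encoding="utf-8")


def load_system_prompt(path: Path) -> str:
    """Return the saved prompt, or the default if none has been saved."""
    if not path.exists():
        return DEFAULT_PROMPT
    return json.loads(path.read_text(encoding="utf-8"))["system_prompt"]


def build_messages(path: Path, user_text: str) -> list[dict]:
    """Prefix every chat with the current system prompt."""
    return [
        {"role": "system", "content": load_system_prompt(path)},
        {"role": "user", "content": user_text},
    ]


with tempfile.TemporaryDirectory() as tmp:
    config = Path(tmp) / "settings.json"
    before = build_messages(config, "Hi")   # uses the default prompt
    save_system_prompt(config, "Be concise and upbeat.")
    after = build_messages(config, "Hi")    # uses the saved prompt

print(before[0]["content"])
print(after[0]["content"])
```

The role/content message shape follows the common chat-message convention; any storage that outlives the session (a database row per user, for example) would serve the same purpose as the JSON file.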

Summary

LangFriend is a research preview that demonstrates the potential of integrating long-term memory into LLM applications. By focusing on journaling, we aim to capture meaningful information from users, provide personalized responses, and thereby improve the user experience. Inspired by academic research and industry work, LangFriend shows that memory can be used actively or run as a background process to create engaging experiences that adapt to user needs. We invite the community to try LangFriend, share feedback, and work with us to push the boundaries of memory in LLM applications, so that generative AI can bring users more powerful, more personalized, and more meaningful experiences.