Introduction

With the rapid development of artificial intelligence, the concept of the Agent has moved from science fiction into the real world. An Agent can be understood as a software entity with a degree of intelligence that can autonomously perform tasks, make decisions, and interact with other systems.

While running our AI technology public account, we received many inquiries about Agent development. This article therefore divides Agent development into three stages: using the large language model (LLM) as an API that understands semantics, as a natural language programming tool, and as a real intelligent agent. It analyzes the evolution of these stages from a technical perspective and provides a code demo for each, as a guide to Agent development for novice programmers.

- Demo address: github.com/q2wxec/lang…

Use the LLM as an API that understands semantics

In the first stage of Agent development, we can think of the large language model (LLM) as an API that understands natural language. We send it a request just as we would call any traditional API, and expect a response that reflects an understanding of the input.

Most agents developed at this stage embed LLM capabilities in traditional software business processes to enhance existing functions. The LLM is used only for its ability to understand natural language, and the application scenarios are mostly limited to text generation and summarization. The development model differs little from traditional software development that calls the APIs of various tools. Typical use cases are as follows:

Short video copywriting creation

We can use the Agent as a content generator: we input the key information about the video, and the Agent generates attractive copy based on it. In this process the Agent serves as a text generation API.
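A minimal sketch of this "text generation API" pattern might look like the following. It assumes the model is reachable through some function that takes a prompt and returns text; the `LLM` type, `build_copy_prompt`, `generate_copy`, and the stand-in `echo_llm` are hypothetical names used only for illustration, not part of the demo repository.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]


def build_copy_prompt(topic: str, key_points: list[str], tone: str = "upbeat") -> str:
    """Assemble the prompt from the video's key information."""
    points = "\n".join(f"- {p}" for p in key_points)
    return (
        f"Write a short, {tone} caption for a short video about {topic}.\n"
        f"Key points to cover:\n{points}\n"
        "Keep it under 50 words."
    )


def generate_copy(llm: LLM, topic: str, key_points: list[str]) -> str:
    """Call the model like any text-in, text-out API and tidy the reply."""
    return llm(build_copy_prompt(topic, key_points)).strip()


def echo_llm(prompt: str) -> str:
    """Stand-in model for local testing; swap in a real client call."""
    return f"  [draft copy for a prompt of {len(prompt)} characters]  "


print(generate_copy(echo_llm, "hiking boots", ["waterproof", "lightweight"]))
```

All of the application logic lives in the prompt template; the model call itself is a single function invocation, which is why this stage feels so close to ordinary API integration.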

News daily summary

Another typical application scenario is the automatic summarization of news content. The Agent receives a large amount of news data, uses its natural language understanding to extract the key information, and generates a concise daily news report. Combining this with web information acquisition tools, I built a simple news summary demo based on LangChain, which can be found on GitHub.

At this stage, Agent development mainly focuses on how to better understand and process natural language. Developers only need to be familiar with the basic principles of natural language processing (NLP) and can implement these functions with existing AI models. The most commonly used AI engineering technique is limited to prompt optimization.
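The shape of such a summarizer can be sketched in plain Python, without LangChain. This is not the demo's code: the article dictionaries, the character limit, and the stand-in `first_sentence_llm` are assumptions made for illustration, and a real version would fetch articles from the web and call an actual model.

```python
from typing import Callable

# Hypothetical type: prompt in, model text out.
LLM = Callable[[str], str]


def summarize_article(llm: LLM, title: str, body: str, max_chars: int = 2000) -> str:
    """Summarize one article, truncating the body so the prompt stays small."""
    prompt = (
        "Summarize this news article in one sentence.\n"
        f"Title: {title}\n\n{body[:max_chars]}"
    )
    return llm(prompt).strip()


def daily_digest(llm: LLM, articles: list[dict[str, str]]) -> str:
    """Summarize each article, then join the summaries into a bulleted digest."""
    return "\n".join(
        f"- {a['title']}: {summarize_article(llm, a['title'], a['body'])}"
        for a in articles
    )


def first_sentence_llm(prompt: str) -> str:
    """Stand-in model: returns the first sentence of the article body."""
    body = prompt.split("\n\n", 1)[1]
    return body.split(".")[0] + "."


articles = [{"title": "Rates", "body": "Rates rose. Markets fell."}]
print(daily_digest(first_sentence_llm, articles))
```

Summarizing article by article and then combining the results is one common way to keep each prompt within the model's context limit when the input is large.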

Use the LLM as a natural language programming tool

The transition from APIs to programming tools

In the second stage, the Agent is no longer just an API that understands language, but a tool for natural language programming. Developers can use natural language to guide agents through more complex tasks.

Agents developed at this stage are no longer limited to text understanding scenarios. Through prompt engineering, function_calling, and similar methods, the large language model can format its output according to the prompt's requirements, which lets it reshape many parts of the traditional software business process. This can be described as natural language programming through the LLM. Typical use cases are as follows:
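The application side of function calling can be sketched as follows: the model is asked to reply with JSON naming a function and its arguments, and ordinary code validates the reply and dispatches it. The JSON shape (`name`, `arguments`), the `get_weather` tool, and the `TOOLS` registry are assumptions for illustration; actual provider APIs wrap the same idea in their own request and response formats.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather service."""
    return f"Sunny in {city}"


# Registry of the functions the model is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_output: str) -> Any:
    """Parse the model's JSON reply and run the function it names."""
    try:
        call = json.loads(model_output)
        name, args = call["name"], call["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        raise ValueError(f"Model did not return a valid tool call: {exc}") from exc
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**args)


# A reply the model might produce when prompted to answer in this format.
reply = '{"name": "get_weather", "arguments": {"city": "Shanghai"}}'
print(dispatch(reply))
```

Because the model's output drives program behavior here, the validation step matters: anything that is not a well-formed call to a registered tool is rejected rather than executed.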

Data table summary and deduplication

In this scenario, the Agent receives multiple data tables, merges and deduplicates the data as directed by natural language prompts, and outputs a clean summary table. Using LangGraph’s process orchestration, I implemented a data table summary demo that combines data processing, filtering, deduplication, and summarization. See GitHub.
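The orchestration idea can be illustrated without LangGraph: each step is a function that takes a shared state and returns an updated one, and a runner applies the steps in order. In the sketch below the steps are deterministic stand-ins for what would be LLM-driven nodes in the demo, and the `email` key used for deduplication is an assumption chosen for the example.

```python
from typing import Callable

State = dict  # shared state passed from step to step


def merge_tables(state: State) -> State:
    """Concatenate all input tables into one list of rows."""
    rows = [row for table in state["tables"] for row in table]
    return {**state, "rows": rows}


def deduplicate(state: State) -> State:
    """Keep the first row seen for each normalized email address."""
    seen: set[str] = set()
    unique = []
    for row in state["rows"]:
        key = row["email"].strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return {**state, "rows": unique}


def summarize(state: State) -> State:
    """Attach a one-line summary of the result."""
    text = f"{len(state['rows'])} unique rows from {len(state['tables'])} tables"
    return {**state, "summary": text}


def run_pipeline(state: State, steps: list[Callable[[State], State]]) -> State:
    """Apply each step in order, threading the state through."""
    for step in steps:
        state = step(state)
    return state


tables = [[{"email": "a@x.com"}, {"email": "b@x.com"}], [{"email": "A@x.com "}]]
result = run_pipeline({"tables": tables}, [merge_tables, deduplicate, summarize])
print(result["summary"])
```

Keeping every step behind the same state-in, state-out signature is what makes it easy to replace any one of them with an LLM call later.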

Automate business processes

Through AI intent recognition, the Agent can reduce the manual review steps in certain business processes, automatically identifying the user's intent and advancing the process accordingly.

To implement the functions at this stage, developers need to master techniques such as prompt engineering and function_calling. These techniques let developers guide the behavior of agents through natural language so that they perform specific tasks as expected.
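One way to picture intent recognition is as a classification prompt followed by a lookup: the model returns one label from a fixed set, and anything outside that set falls back to manual review. The labels, the prompt wording, and the keyword-based `keyword_llm` stand-in below are all hypothetical.

```python
from typing import Callable

# Hypothetical type: prompt in, model text out.
LLM = Callable[[str], str]

# Hypothetical intent labels for a customer-service process.
INTENTS = ("approve_refund", "escalate", "request_info")


def classify_intent(llm: LLM, message: str) -> str:
    """Ask the model for one label; fall back to manual review if it strays."""
    prompt = (
        "Classify the customer message into exactly one of: "
        + ", ".join(INTENTS)
        + f".\nMessage: {message}\nAnswer with the label only."
    )
    label = llm(prompt).strip().lower()
    return label if label in INTENTS else "manual_review"


def keyword_llm(prompt: str) -> str:
    """Stand-in model: looks only at the 'Message:' line of the prompt."""
    message = prompt.split("Message: ", 1)[1].split("\n", 1)[0].lower()
    if "refund" in message:
        return "approve_refund"
    if "manager" in message:
        return "escalate"
    return "I am not sure"


print(classify_intent(keyword_llm, "Please refund my order"))
print(classify_intent(keyword_llm, "Hello there"))
```

The fallback branch is the part that keeps a human in the loop: only replies that match a known label are allowed to advance the process automatically.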

Use the LLM as a real intelligent agent

In the third stage of Agent development, the Agent is truly used as an intelligent agent. It is no longer just a simple API or tool, but an intelligent partner with independent decision-making capabilities.

Agent development at this stage is roughly what people mean by AI-native application development (although there is no standardized, unified definition of an AI-native application). The way of thinking about application development at this stage is completely different from that of traditional software development: the LLM is truly used as intelligence.

When developing software at this stage, it may be more important to consider how the task would be handled if it were executed by a human team:

- Which roles are needed (role qualifiers in the prompt)

- What skills those roles require (tool binding)

- How team members should interact with each other (state settings)

- How work flows between team members (workflow settings)

At this stage, the LLM no longer exists independently as an API. It is bound to roles and skills and works in combination with other agents, which is consistent with the way human intelligence is put to use. Typical use cases are as follows:
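The four questions above map naturally onto a few data structures. The sketch below is one possible encoding, not a framework API: `AgentRole`, `TeamState`, and `run_workflow` are hypothetical names, the tools are plain functions, and the workflow is a fixed sequence, whereas a real agent would let the LLM (steered by the role's system prompt) decide which tool to use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentRole:
    name: str
    system_prompt: str  # role qualifier placed in the prompt
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)  # skills


@dataclass
class TeamState:
    task: str
    notes: list[str] = field(default_factory=list)  # what the roles share


def run_workflow(roles: list[AgentRole], state: TeamState) -> TeamState:
    """Fixed sequential workflow: each role runs its tools and records the results."""
    for role in roles:
        for tool_name, tool in role.tools.items():
            state.notes.append(f"{role.name}/{tool_name}: {tool(state.task)}")
    return state


researcher = AgentRole(
    "researcher", "You gather facts.", {"search": lambda t: f"results for {t}"}
)
writer = AgentRole(
    "writer", "You write clear reports.", {"draft": lambda t: f"draft of {t}"}
)
final = run_workflow([researcher, writer], TeamState(task="market report"))
print(final.notes)
```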

Plan-and-Execute

Plan-and-Execute refers to a process with two main steps: planning and execution. The Agent autonomously generates a plan for a given goal and then automatically executes tasks according to the plan's steps. This requires the Agent to be able to understand plans, formulate strategies, and execute tasks.

For example, if the given task is “Plan a trip from Beijing to Shanghai”, the “planning” step of the large language model may include determining travel dates, choosing transportation, and booking accommodation and activities, while the “execution” step may involve generating a detailed itinerary and the necessary booking steps.

I combined search and automatic question-answering tools and used Plan-and-Execute to create a search-enhanced question-answering demo, which can be found on GitHub.
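The two phases can be sketched as two functions around a model call: one asks for a numbered plan and parses it into steps, the other executes the steps in order while passing earlier results along as context. The prompt wording, the parsing rule, and the scripted stand-in model are assumptions for illustration; the demo itself may structure this differently.

```python
from typing import Callable

# Hypothetical type: prompt in, model text out.
LLM = Callable[[str], str]


def plan(llm: LLM, goal: str) -> list[str]:
    """Ask the model for a numbered plan and parse it into a list of steps."""
    reply = llm(f"Break this goal into short numbered steps, one per line.\nGoal: {goal}")
    steps = []
    for line in reply.splitlines():
        line = line.strip()
        if line and line[0].isdigit():
            # Drop the leading "1." style numbering.
            steps.append(line.split(".", 1)[-1].strip())
    return steps


def execute(llm: LLM, goal: str, steps: list[str]) -> list[str]:
    """Run the steps in order, giving the model the results so far as context."""
    results: list[str] = []
    for step in steps:
        context = "\n".join(results)
        results.append(llm(f"Goal: {goal}\nDone so far:\n{context}\nNow do: {step}"))
    return results


def scripted_llm(prompt: str) -> str:
    """Stand-in model with canned replies for the two kinds of prompt."""
    if prompt.startswith("Break this goal"):
        return "1. Pick travel dates\n2. Choose transport\n3. Book a hotel"
    return "done: " + prompt.rsplit("Now do: ", 1)[1]


goal = "Plan a trip from Beijing to Shanghai"
steps = plan(scripted_llm, goal)
print(steps)
print(execute(scripted_llm, goal, steps))
```

Separating the phases means the plan can be inspected, edited, or re-generated before anything is executed, which is a large part of the pattern's appeal.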

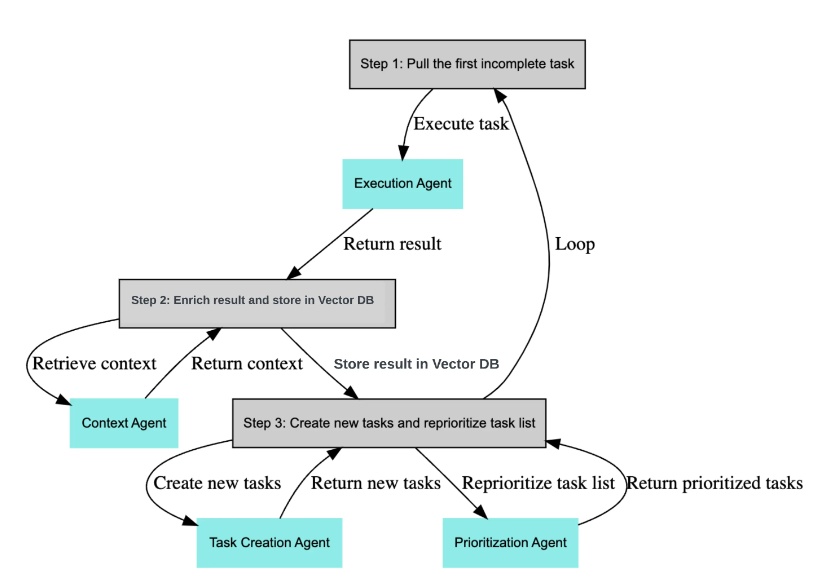

BabyAGI

BabyAGI is an AI-powered task management system created by developer Yohei Nakajima. It leverages the OpenAI and Pinecone APIs to create, prioritize, and execute tasks.
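The create, prioritize, and execute cycle can be illustrated with a small task loop. This is a simplified sketch in the spirit of BabyAGI rather than its actual code: the three callables stand in for LLM-backed agents, there is no vector store, and the function names and iteration cap are assumptions.

```python
from collections import deque
from typing import Callable


def run_task_loop(
    objective: str,
    first_task: str,
    execute: Callable[[str, str], str],
    create_tasks: Callable[[str, str, str], list[str]],
    prioritize: Callable[[str, list[str]], list[str]],
    max_iterations: int = 5,
) -> list[tuple[str, str]]:
    """Repeatedly execute the top task, add follow-up tasks, and reorder the queue."""
    tasks = deque([first_task])
    completed: list[tuple[str, str]] = []
    for _ in range(max_iterations):  # cap iterations so the loop always ends
        if not tasks:
            break
        task = tasks.popleft()
        result = execute(objective, task)
        completed.append((task, result))
        # Ask for follow-up tasks, skipping any already queued.
        new = [t for t in create_tasks(objective, task, result) if t not in tasks]
        tasks = deque(prioritize(objective, list(tasks) + new))
    return completed


# Stand-ins for the three LLM-backed agents.
done = run_task_loop(
    objective="publish a market report",
    first_task="research",
    execute=lambda objective, task: f"did {task}",
    create_tasks=lambda objective, task, result: ["write report"] if task == "research" else [],
    prioritize=lambda objective, tasks: sorted(tasks),
)
print(done)
```

The iteration cap is worth keeping in any real version as well, since a task-creation agent can otherwise keep generating work indefinitely.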

At this stage, developers need to consider how to model the Agent on the way human teams work. This involves role definition, skill binding, state settings, and workflow settings.

Conclusion

The three stages of Agent development represent the evolution of AI technology from simple application to deep integration. As the technology continues to advance, we have reason to believe that Agents will play an increasingly important role in future software development. For programmers, understanding and mastering these three stages will help them better adapt to future technology trends.